From the creators of Calyptia and Fluent Bit

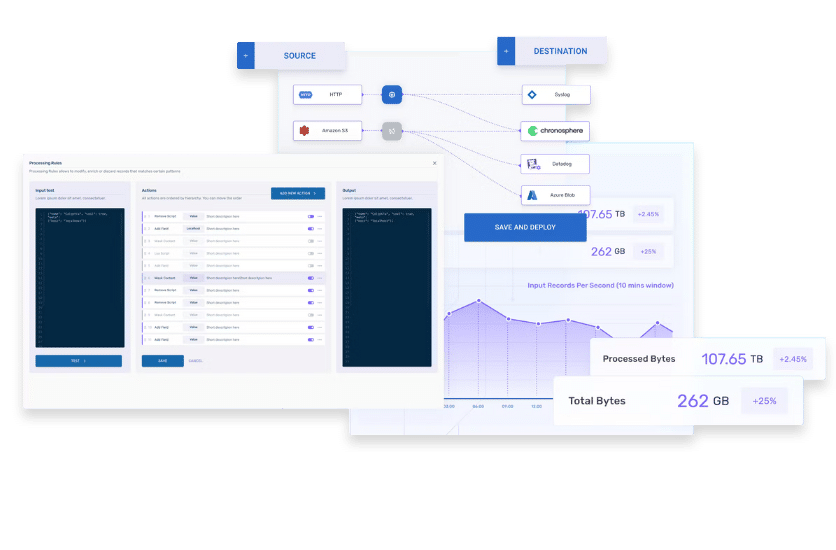

Simplified, End-to-end Observability Pipeline

Chronosphere Telemetry Pipeline, from the creators of Fluent Bit and Calyptia, streamlines log collection, aggregation, transformation, and routing from any source to any destination.

This gives companies dealing with high costs and complexity the ability to control their data and scale with their growing business needs.

Reduce Volume and Costs

Reduce and enrich logs at the collection point, before they are routed. Filter out unnecessary data, redact sensitive data and add valuable context to your logs. This ensures that you only retain the most relevant data in its most optimized form, reducing log volume and associated storage costs by at least 30%.
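As a rough illustration of what filtering, redacting, and enriching a log record at the collection point can look like, here is a minimal Python sketch. It is not Telemetry Pipeline's actual configuration or API; the `process` function, the `DROP_LEVELS` set, the email pattern, and the sample records are all hypothetical and chosen only to make the three steps concrete.

```python
import re

# Hypothetical rules, chosen only for illustration.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
DROP_LEVELS = {"debug", "trace"}


def process(record, context):
    """Return a redacted, enriched copy of record, or None to drop it."""
    # Filter: drop low-value records before they are routed anywhere.
    if record.get("level", "").lower() in DROP_LEVELS:
        return None
    # Redact: mask email addresses in the message text.
    message = EMAIL.sub("[REDACTED]", record.get("message", ""))
    # Enrich: attach static context such as the environment name.
    return {**record, "message": message, **context}


logs = [
    {"level": "DEBUG", "message": "cache warm"},
    {"level": "ERROR", "message": "login failed for ana@example.com"},
]
processed = (process(entry, {"env": "prod"}) for entry in logs)
kept = [record for record in processed if record is not None]
```

In this sketch the debug record is dropped entirely, and the surviving error record has its email address masked and an `env` field added before it would be routed onward.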

Streamline Log Operations

Automate the management of log pipelines with a suite of operations such as automatic load balancing and one-click scaling. These features not only reduce the operational burden on your teams but also enhance system reliability by avoiding disruptions and ensuring continuous data flow.

Highly Resilient and Performant

Chronosphere Telemetry Pipeline is developed by the creators of Fluent Bit, leveraging the proven reliability of the leading open-source log processor. Written in C, it offers unmatched processing speeds and resource efficiency compared to traditional Node.js systems used by other leading solutions.

Telemetry Pipeline is also fully compatible with Kubernetes, making it an ideal choice for modern, cloud-native environments.

Accelerate Integration and Insight

Reduce the time it takes to integrate new data sources and destinations from days or months to minutes with Chronosphere Telemetry Pipeline. Built-in integrations empower teams to quickly build new data streams and extract valuable insights faster without the need for custom coding and lengthy adjustments.

Chronosphere Telemetry Pipeline streamlines data management with a drag-and-drop pipeline builder. This approach reduces complexity and enables rapid pipeline deployment.

Automate your operations with Telemetry Pipeline’s suite of automation features. This includes automatic load balancing, automatic retry, automatic healing, one-click scaling, and more, even in Kubernetes environments.

Chronosphere comes with a variety of built-in processing transformations to refine data collection in-flight. These turnkey rules offer extensive customization for aggregation, enrichment, redaction, filtering, and more, all within a user-friendly interface.

Seamlessly integrate with the major cloud providers and the most popular observability and SIEM solutions for quick and easy onboarding. Telemetry Pipeline also provides support for all open standards and signals, including OpenTelemetry and Prometheus.

Telemetry Pipeline simplifies fleet operations by automating and centralizing the installation, configuration and maintenance of Fluent Bit agents across thousands of machines. This ensures uniformity and ease of management across your entire environment.

Chronosphere guarantees enterprise-level security and stability, supporting various operating systems (including RHEL, macOS, and Windows) and providing consistent updates to maintain a secure environment for data operations.