Holiday on-call duty: present or punishment?

On: Nov 22, 2023

Paige Cruz is a Senior Developer Advocate at Chronosphere who is passionate about cultivating sustainable on-call practices and bringing folks their aha moment with observability. She started as a software engineer at New Relic before switching to Site Reliability Engineering, holding the pager for InVision, Lightstep, and Weedmaps. Off the clock, you can find her spinning yarn, swooning over alpacas, or watching trash TV on Bravo.

Imagine this: It’s Thanksgiving Day, and you just crossed the finish line at a Turkey Trot 5K. You’re ready to celebrate when you check your phone and see 10 missed calls from PagerDuty. Yikes. You forgot you had volunteered for a holiday on-call shift. The upside: the page must have escalated to the secondary on-call.

It’s not ideal, but this is exactly why you keep a secondary schedule in place. You open Slack to check the current status and whether you need to jump into the investigation, and your stomach drops. The secondary also missed the page, so it escalated to the tertiary, the executive rotation. The CTO is now running incident response. Oops.

This is a real story that happened at a previous company. It would be easy to play backseat driver to the incident response and blame the primary and secondary for being irresponsible. If that is your initial reaction, I recommend leafing through Sidney Dekker’s Stop Blaming.

I see multiple contributing factors in this incident:

- The volunteer, opt-in rotation was an experiment to spread the holiday on-call load equitably, and it had been established only a few weeks earlier.

- The shifts were 12 hours long, very different from the typical week-long rotations. Because regular, non-holiday shifts and schedules were stable, the engineers had not configured PagerDuty to notify them when their shifts started. Normally, they had an entire week on secondary to mentally prepare for an upcoming primary shift.

- Due to the holidays, no one was actively working on or monitoring the system. During regular business hours, plenty of engineers are online to catch early warning signs or hop in to help investigate. During the holidays, engineers not on-call were enjoying the break.
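The second factor is the easiest to guard against mechanically: make sure each volunteer hears about their shift before it starts. As a tool-agnostic sketch (the function name, the volunteer names and the one-person-per-shift rotation are all hypothetical assumptions, not PagerDuty’s API), here is how back-to-back 12-hour holiday shifts translate into reminder times you could load into a calendar or scheduler.

```python
from datetime import datetime, timedelta


def shift_reminders(
    rotation_start: datetime,
    volunteers: list[str],
    shift_length: timedelta = timedelta(hours=12),
    lead_time: timedelta = timedelta(hours=1),
) -> list[tuple[datetime, str, datetime]]:
    """Return (remind_at, volunteer, shift_start) for back-to-back shifts, one per volunteer.

    Hypothetical helper: assumes a simple rotation where each volunteer covers
    exactly one shift, in list order, starting at rotation_start.
    """
    reminders = []
    for i, person in enumerate(volunteers):
        # Volunteer i starts i * shift_length after the rotation begins.
        shift_start = rotation_start + i * shift_length
        # Remind each person lead_time before their shift so it does not start unnoticed.
        reminders.append((shift_start - lead_time, person, shift_start))
    return reminders


if __name__ == "__main__":
    # Hypothetical Thanksgiving rotation starting at midnight.
    start = datetime(2023, 11, 23, 0, 0)
    for remind_at, person, shift_start in shift_reminders(start, ["alex", "blake", "casey"]):
        print(f"remind {person} at {remind_at:%a %H:%M} for shift starting {shift_start:%a %H:%M}")
```

Sending the reminder ahead of the handoff, rather than at it, leaves time to charge a phone or step off the race course before the pager is live.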

Holidays are the worst time for a SEV-1 incident because of the disrupted routine and reduced availability of colleagues. Incident response during holidays uncovers how much your organization relies on engineering “heroes.” These are the engineers who are always pulled in to help answer questions about a service, the ones who know where the right runbook is and which alerts indicate user-facing issues. The more you rely on those heroes, the more you hinder other engineers’ growth and development. The problem isn’t that your organization has experts in your system; it’s that this expertise is locked away in a few engineers’ heads.

With the end of the year looming, what can you do today to turn holiday on-call from a punishment into a present? Increase the observability of your system. Full stop.

The observability platform paradox

Of course, there’s a bit of a paradox here. You need an observability platform to ingest, process, store and analyze signals like events, metrics, traces, and logs, but simply having an observability platform doesn’t mean your system is highly observable.

There is no “instant observability” powder you can sprinkle on your services to immediately generate actionable insights. And having more observability platforms does not equate to higher observability. Anyone working at one of the 60% of organizations that rely on 10 or more observability tools understands this all too well.

Fundamentally, observability describes how effectively you can understand system behavior given the telemetry and tools available. It goes beyond monitoring and delivering the right page at the right time to the right engineer. It serves as a source of truth about your system.

Observability platforms can industrialize institutional knowledge, democratize telemetry data, and empower anyone, from the newest engineer to those on call during the holidays, to confidently investigate issues in the services they own, in their upstream and downstream dependencies, and beyond, without having to pull in an expert.

How to increase observability

Here are four steps you can take to increase observability with the telemetry and tooling available to you:

1. Link, link, link

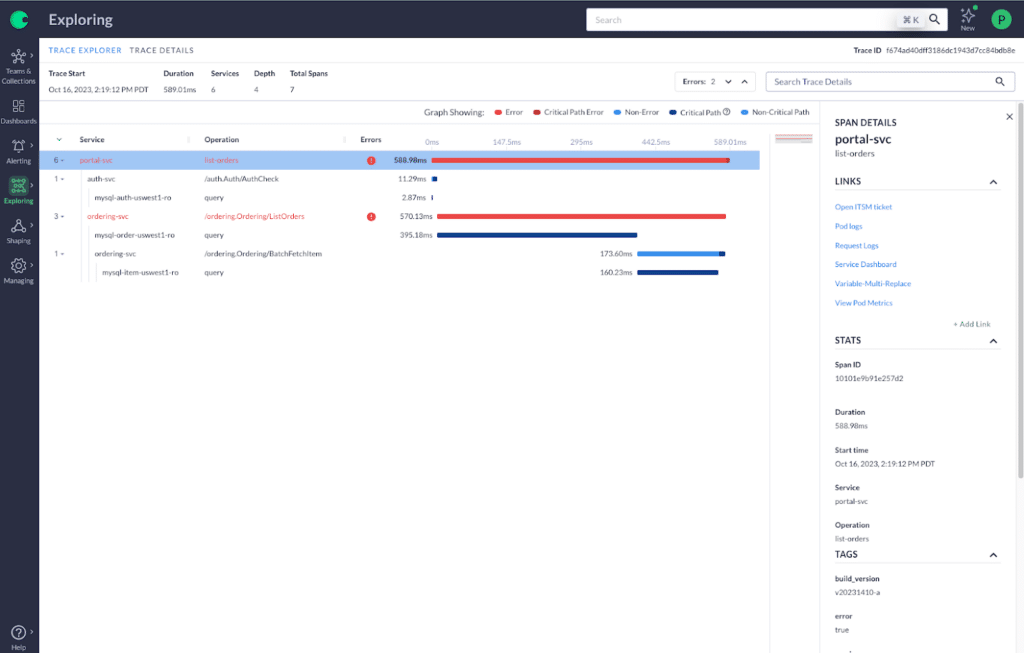

Link your telemetry together, both within a tool and across tools. As you start exploring the data, you want to be able to hop from the original anomaly in a metric chart, to a view of any potential change events, to a trace waterfall, to the logs for a service. Linking telemetry helps you investigate almost at the speed of thought, without getting bogged down in the exact query syntax for each of the 10 tools you have.
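One way to make those hops cheap is to generate every link from the same alert context, so each tool opens on the same service and time window. The sketch below assumes hypothetical base URLs and query parameter names (`service`, `from`, `to`); your tools’ real deep-link formats will differ.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from urllib.parse import urlencode

# Hypothetical deep-link endpoints; substitute your own tools' URL formats.
LINK_TEMPLATES = {
    "metrics": "https://metrics.example.com/explore",
    "changes": "https://metrics.example.com/changes",
    "traces": "https://traces.example.com/search",
    "logs": "https://logs.example.com/query",
}


@dataclass
class AlertContext:
    service: str
    fired_at: datetime


def build_links(ctx: AlertContext, lookback: timedelta = timedelta(minutes=30)) -> dict[str, str]:
    """Return one deep link per tool, all scoped to the same service and time window."""
    start = ctx.fired_at - lookback
    end = ctx.fired_at + timedelta(minutes=5)  # small padding after the alert fired
    # urlencode percent-escapes the ISO timestamps (":" and "+") for us.
    params = urlencode({"service": ctx.service, "from": start.isoformat(), "to": end.isoformat()})
    return {tool: f"{base}?{params}" for tool, base in LINK_TEMPLATES.items()}


if __name__ == "__main__":
    ctx = AlertContext("checkout", datetime(2023, 11, 23, 14, 2, tzinfo=timezone.utc))
    for tool, url in build_links(ctx).items():
        print(f"{tool}: {url}")
```

Attaching these links to the page itself means the first click after waking up already lands on the right window in every tool.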

Link runbooks for every paging alert. If an issue is worth paging an engineer over, it is worth outlining the impact and potential mitigations in a runbook. Having runbooks a single click away accelerates your response after a page.

Link to communication channels. With reorgs and frequent team changes, knowing who owns what and how to reach them can be the world’s worst scavenger hunt. Adding links to your team communication channels helps others in your organization get in touch with the correct person quickly.

Link to directly responsible individuals (DRIs), often the current primary on-call. If you get paged for an issue but find the error stems from an upstream dependency, knowing who to notify about the impact to your service(s) is the first step in response coordination. Jumpstart their investigation by including relevant context like the query, trace or chart you used to identify the issue.
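The linking practices above are easy to let slip, so it can help to check them automatically. A minimal sketch, assuming a hypothetical in-house alert definition with a free-form `links` map (the field and key names are illustrative, not any vendor’s schema), that reports every paging alert missing a runbook, a team channel or an on-call (DRI) link:

```python
from dataclasses import dataclass, field


@dataclass
class AlertRule:
    """Hypothetical alert definition; field names are illustrative, not a vendor schema."""
    name: str
    pages: bool  # True if this alert pages a human
    links: dict[str, str] = field(default_factory=dict)


# Links every paging alert should carry: runbook, team channel and current on-call (DRI).
REQUIRED_LINKS = ("runbook", "team_channel", "on_call")


def missing_links(rules: list[AlertRule]) -> dict[str, list[str]]:
    """Map each paging alert's name to the required links it lacks."""
    problems: dict[str, list[str]] = {}
    for rule in rules:
        if not rule.pages:
            continue  # non-paging alerts are out of scope for this check
        missing = [key for key in REQUIRED_LINKS if not rule.links.get(key)]
        if missing:
            problems[rule.name] = missing
    return problems


if __name__ == "__main__":
    rules = [
        AlertRule("CheckoutErrorRateHigh", pages=True, links={
            "runbook": "https://wiki.example.com/runbooks/checkout-errors",
            "team_channel": "https://chat.example.com/channels/team-checkout",
            "on_call": "https://oncall.example.com/schedules/checkout-primary",
        }),
        AlertRule("SearchLatencyHigh", pages=True, links={
            "runbook": "https://wiki.example.com/runbooks/search-latency",
        }),
        AlertRule("DiskUsageWarning", pages=False),
    ]
    for name, missing in missing_links(rules).items():
        print(f"{name} is missing: {', '.join(missing)}")
```

Running a check like this in CI, next to where alerts are defined, turns “every paging alert has a runbook” from a good intention into a gate.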

2. Bring in metric data from other parts of the business

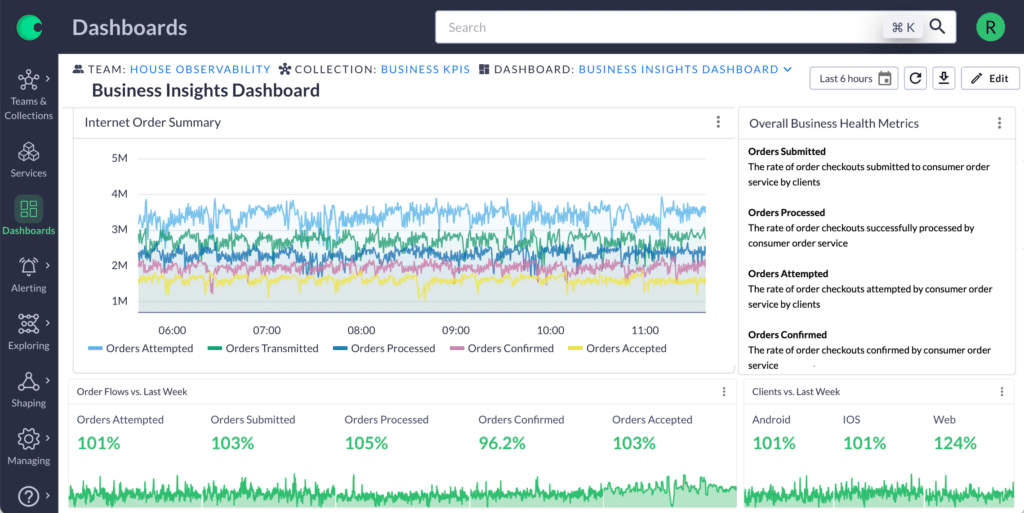

Tie your existing application and infrastructure data to business metrics from other groups such as customer success, revenue or business operations. Tying telemetry to broader organizational goals maximizes the value of your observability data. Wouldn’t it be great to see that fixing a bug resulted in 30% fewer support tickets, or to track the volume of orders being placed or how many people are logged in to your product? You know you’re successful when groups outside engineering start loading up dashboards in the same tools you use.

3. Get the most out of events

Tracking events such as deployments, feature flags, infrastructure changes and third-party dependency status atop charts is a game changer. Instead of hunting down the right Slack channel to ask, “Did anyone change something around 2:02 p.m. today?” you can find out by toggling a switch to get events overlaid on a metric chart for faster correlation. You can even instrument business events that place the customer experience front and center, such as onboarding a new customer or sending a new coupon code.
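Under the hood, the overlay answers a simple question: which changes landed shortly before the anomaly? A minimal sketch, assuming a hypothetical `ChangeEvent` record and a 15-minute lookback window (both illustrative choices, not a specific product’s data model):

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class ChangeEvent:
    at: datetime
    kind: str  # e.g. "deploy", "feature_flag", "infra", "third_party", "business"
    summary: str


def changes_before(
    events: list[ChangeEvent],
    anomaly_at: datetime,
    window: timedelta = timedelta(minutes=15),
) -> list[ChangeEvent]:
    """Return events in [anomaly_at - window, anomaly_at], newest first."""
    start = anomaly_at - window
    hits = [e for e in events if start <= e.at <= anomaly_at]
    # Newest first: the most recent change is usually the first suspect.
    return sorted(hits, key=lambda e: e.at, reverse=True)


if __name__ == "__main__":
    day = datetime(2023, 11, 23)
    events = [
        ChangeEvent(day.replace(hour=13, minute=10), "deploy", "cart-service v1.42"),
        ChangeEvent(day.replace(hour=13, minute=55), "feature_flag", "new-coupon-flow at 50%"),
        ChangeEvent(day.replace(hour=14, minute=1), "infra", "node pool scaled down"),
        ChangeEvent(day.replace(hour=14, minute=30), "deploy", "search v7.3"),
    ]
    anomaly = day.replace(hour=14, minute=2)  # "around 2:02 p.m. today"
    for e in changes_before(events, anomaly):
        print(e.at.strftime("%H:%M"), e.kind, e.summary)
```

A fixed lookback window is the simplest choice; slow-burning changes such as a gradual feature-flag rollout may need a wider window than a deploy.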

4. Customize dashboards for critical workflows

Default, out-of-the-box dashboards only get you so far in understanding your system. A custom dashboard pays off when you find yourself constructing complex queries or jumping between several tabs to investigate an issue that spans multiple team or service boundaries.

Making the case for observability

Selling other engineers on the benefits of increasing observability is the easy part; they’re also feeling the pain of wading through multiple tools and manually cobbling together context while investigating.

I can tell you from experience that getting leadership buy-in to drive the necessary cultural change and spearhead organization-wide observability initiatives is a challenge without a “big one”: a customer-facing incident severe or long enough to make the C-suite sweat and prioritize investments in observability and the on-call experience.

Leverage the upcoming holiday season as a forcing function for proactive improvement. Your sphere of influence is strongest within your team, so start there (you can even send them this article as a starting point). Spend on-call time increasing observability for the services and infrastructure you’re responsible for. Share your learnings widely and loudly across the organization. You’re setting a precedent for knowledge sharing among teams and showing how observability can be part of the on-call toolkit.

Put observability on top of your gift wish list

If your current observability tools make it difficult to effectively investigate and understand the system you’re on-call for (holidays or not), it might be time to give yourself an upgrade. Look for features such as:

Linked telemetry: It’s extremely beneficial to link across different telemetry types and to other helpful information, such as a help desk ticket. This saves you from hopping between applications to gather everything you need.

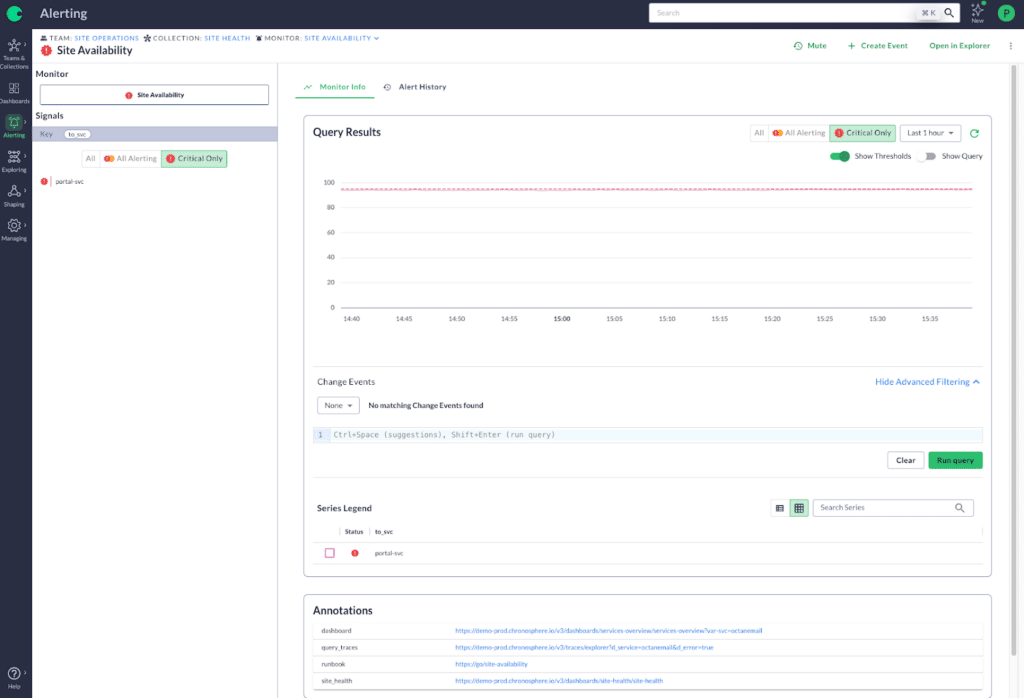

Annotations: Unfortunately, Chronosphere can’t write runbooks just yet. But you can attach annotations to monitors and keep all relevant runbooks and information easily accessible at the bottom of the dashboard.

Change Event Tracking: This feature stores all recent integration changes in one place, making it easier to see what’s happening with feature flags, CI/CD, infrastructure events and custom events you set up. With a centralized source, you can quickly catch anomalies in real time, regardless of where they are in your system.

Dashboard customization: Every team has different requirements for the data they need regularly. By customizing dashboards for critical workflows and translating metric names into plain language, you can help non-developers understand what’s going on in your environment and help everyone remediate issues more quickly.

Every engineer should have telemetry and tooling access that helps them explore beyond the boundaries of the services they own. Having the right observability vendor and telemetry is essential to support you both on and off call. Day to day, they help your systems run smoothly. But during the holiday season, they can be a lifesaver when you’re working with fewer staff and changing resources.

And of course, happy h-O11y-days from all of us at Chronosphere!
