More complex applications mean more logs

Due to their complexity, scale, and dynamic nature, cloud-native applications require observability. To gain a comprehensive understanding of such systems, we need access to metrics, logs, and traces from all the components, which can help teams identify and remediate issues.

Fluent Bit is a lightweight open source telemetry pipeline that simplifies collecting, processing, and routing telemetry data from any source to any destination, including observability solutions like Chronosphere Platform and object storage services like Amazon S3 and Google Cloud Storage.

Object storage services are a cost-effective and scalable solution for storing and managing the growing volume of logs that our systems produce.

In this blog post, we’ll demonstrate how Fluent Bit can collect logs from your cloud-native infrastructure and route them to Amazon S3 for storage to meet compliance and auditing requirements.

Why use Amazon S3 for storing logs?

Amazon S3 is a highly scalable, durable, and secure object storage service provided by AWS. It allows users to store and retrieve data, such as text files, images, and videos, from anywhere on the web.

Here are some reasons why Amazon S3 is particularly effective for storing logs:

- Scalability: Amazon S3 is a highly scalable and durable service that can store virtually unlimited amounts of data. This makes it an ideal solution for applications that generate a large volume of logs, metrics, and traces.

- Cost-effective: With low storage costs and pay-as-you-go pricing, Amazon S3 is a cost-effective option. You pay only for what you store and retrieve, so costs scale with your log volume.

- Easy integration: Being an AWS offering, S3 easily integrates with other AWS services, such as Amazon CloudWatch and Amazon OpenSearch Service, allowing you to collect and analyze logs, metrics, and traces in a unified way.

- Security: Amazon S3 has robust access control and security mechanisms built in to prevent unauthorized access. This helps you keep your data secure and compliant with industry standards and regulations.

- Data durability: Amazon S3 stores multiple copies of your data across multiple devices and data centers, so your data stays durable and available through hardware failures or natural disasters.

- Analytics: S3 provides built-in analytics capabilities, such as S3 Inventory and S3 Select, that allow you to analyze your data at scale and gain insights into usage patterns, access patterns, and other metrics.

Forwarding logs from Fluent Bit to S3

Fluent Bit utilizes a pluggable architecture that makes it a versatile option for collecting logs. It includes both Input and Output plugins, one of which is the Amazon S3 output plugin that we’ll be using.

We’ll configure Fluent Bit to collect CPU, kernel, and systemd data from a server and push it to Amazon S3.

Refer to the Fluent Bit installation documentation to install it on your system.

AWS S3 configuration

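The first step is to create an S3 bucket if you don’t already have one. Once the bucket exists, make sure the AWS principal that Fluent Bit will use has the s3:PutObject permission. One way to do this is to attach the following policy to that IAM user or role. If you instead add it under Bucket Policy on the bucket's Permissions tab, note that an S3 bucket policy also requires a Principal element and a Resource that names the bucket.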

    {
      "Version": "2012-10-17",
      "Statement": [{
        "Effect": "Allow",
        "Action": [
          "s3:PutObject"
        ],
        "Resource": "*"
      }]
    }

This grants permission to add objects to the bucket.
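The policy above sets "Resource": "*", which permits writes to any bucket the principal can reach. As a hedged sketch of a tighter alternative, the Python helper below builds the same statement scoped to the objects of a single bucket. The function name put_object_policy is hypothetical (it is not part of any AWS tool), and the bucket name you pass in is your own.

```python
import json


def put_object_policy(bucket: str) -> dict:
    """Build an identity-based policy allowing s3:PutObject on one bucket only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                # Objects are addressed under the bucket ARN, hence the trailing /*.
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }


def policy_as_json(bucket: str) -> str:
    """Render the policy as indented JSON, ready to paste into the IAM console."""
    return json.dumps(put_object_policy(bucket), indent=2)
```

Calling policy_as_json("fluent-bit-s3-demo") would produce a policy limited to the demo bucket used in the output configuration later in this post.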

Next, you’ll need credentials for the AWS principal. In the AWS console, go to IAM -> Access Management -> Users and select the user. Navigate to the Security Credentials tab and generate access keys. Note down the access key and secret.

If you’re using an Ubuntu system, AWS credentials are stored at /home/<username>/.aws/credentials. You can edit this file directly and add the following lines:

    [default]
    aws_access_key_id =
    aws_secret_access_key =

An easier way is to install the AWS CLI on your system, then open a terminal and run aws configure. At the prompts, provide the access key and secret. This automatically saves the details to the credentials file.
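Before pointing Fluent Bit at the credentials file, it can help to confirm that the default profile really contains both keys. The following is a minimal sketch using Python's standard configparser module. The function name missing_credential_keys is an illustrative assumption, and the check only confirms that the keys are present and non-empty, not that AWS accepts them.

```python
import configparser

REQUIRED_KEYS = ("aws_access_key_id", "aws_secret_access_key")


def missing_credential_keys(text: str, profile: str = "default") -> list:
    """Return the required keys that are absent or empty in the given profile."""
    # Interpolation is disabled so a '%' in a value is never treated specially.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    if not parser.has_section(profile):
        return list(REQUIRED_KEYS)
    section = parser[profile]
    return [key for key in REQUIRED_KEYS if not section.get(key, "").strip()]
```

To check a real file, read it first, for example with Path("/home/<username>/.aws/credentials").read_text(), and pass the text to the function. An empty list means both keys were found.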

Fluent Bit configuration

To write to the S3 bucket, Fluent Bit needs AWS credentials. The Fluent Bit documentation describes how Fluent Bit retrieves AWS credentials.

Since our credentials are already saved in the .aws/credentials file, we need to configure the service file for Fluent Bit and set the path to this credentials file. To do that, open a terminal and edit the fluent-bit.service file located at /usr/lib/systemd/system/fluent-bit.service.

You need to add the following line after [Service]:

    [Service]
    Environment="AWS_SHARED_CREDENTIALS_FILE=/home/<username>/.aws/credentials"
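Why is this line needed? A systemd service typically does not run as your login user, so a lookup under the service user's home directory would not find the file you just created. The sketch below illustrates the lookup convention that AWS tooling follows: the AWS_SHARED_CREDENTIALS_FILE variable wins, and ~/.aws/credentials is the fallback. It is an illustration only, not Fluent Bit's actual code, and shared_credentials_path is a hypothetical name.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def shared_credentials_path(
    env: Optional[Mapping[str, str]] = None,
    home: Optional[Path] = None,
) -> Path:
    """Resolve the shared credentials file the way the AWS convention describes."""
    env = os.environ if env is None else env
    home = Path.home() if home is None else home
    override = env.get("AWS_SHARED_CREDENTIALS_FILE")
    if override:
        # An explicit path takes precedence over the home-directory default.
        return Path(override)
    return home / ".aws" / "credentials"
```

With an empty environment and a home of /root, the function resolves to /root/.aws/credentials, which is why the service needs the explicit override. After editing the unit file, run systemctl daemon-reload so systemd picks up the change.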

Next, let’s update the config file with Input and Output plugins as follows:

    [INPUT]
        Name cpu
        Tag fluent_bit

    [INPUT]
        Name kmsg
        Tag fluent-bit

    [INPUT]
        Name systemd
        Tag fluent-bit

We are taking inputs from three different sources: cpu, kernel, and systemd.

Our output configuration should look like this:

    [OUTPUT]
        Name stdout
        Match *

    [OUTPUT]
        Name s3
        Match *
        Bucket fluent-bit-s3-demo
        Region us-west-2
        Store_dir /tmp/fluent-bit/s3
        Total_file_size 10M

We have two outputs here: one writes all the logs to the screen (a basic debugging technique), and the other is the S3 plugin. We need to include the name of the S3 bucket and the region where the bucket is. Please remember to update the Bucket and Region based on your own setup.
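A forgotten Bucket or Region is an easy mistake to make when adapting this configuration. As a rough sketch, the Python snippet below parses the classic section/key-value layout shown above and reports which of those two settings are missing from any s3 output. Both function names are hypothetical, the parser handles only the simple syntax used in this post (no includes or variables), and the "required" list reflects what this post asks you to set rather than the plugin's own validation rules.

```python
def parse_classic_config(text: str) -> list:
    """Parse '[SECTION]' headers and 'Key value' lines into (section, props) pairs."""
    sections = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            sections.append((line[1:-1].upper(), {}))
        elif sections:
            parts = line.split(None, 1)  # key, then the rest of the line
            value = parts[1].strip() if len(parts) > 1 else ""
            sections[-1][1][parts[0].lower()] = value
    return sections


def s3_outputs_missing_keys(text: str, required=("bucket", "region")) -> list:
    """List the settings from `required` that are missing in s3 [OUTPUT] sections."""
    missing = []
    for name, props in parse_classic_config(text):
        if name == "OUTPUT" and props.get("name", "").lower() == "s3":
            missing.extend(key for key in required if not props.get(key))
    return missing
```

Passing the contents of your config file to s3_outputs_missing_keys returns an empty list when every s3 output names both a bucket and a region.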

We also need to specify a Store_dir, a local directory where Fluent Bit buffers logs before pushing them to S3. If the fluent-bit service stops for any reason, the buffered logs remain here and are pushed to the S3 bucket once the service restarts. This helps ensure you don’t lose any logs. Total_file_size sets the target size of each file written to S3 (10 MB here).

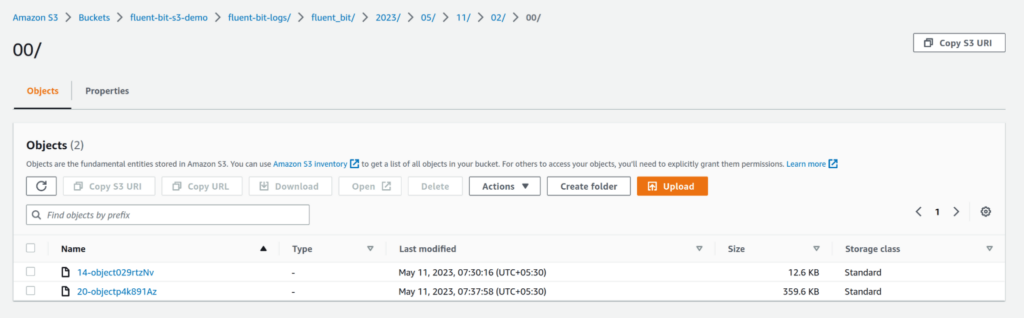

See your logs in S3

After you’ve saved the config file, restart the fluent-bit service. Navigate to the S3 bucket, and you should see folders being created with the name fluent-bit. Within the folder, you’ll see subfolders based on year, month, day, and time, and eventually the log files themselves. You can customize this layout through the S3 output plugin's configuration parameters, such as s3_key_format.
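To see where the nested folders come from, the sketch below expands a key format into an object key. It assumes the plugin's documented default s3_key_format is /fluent-bit-logs/$TAG/%Y/%m/%d/%H/%M/%S; verify this against the documentation for your Fluent Bit version. The function expand_key is hypothetical and covers only the tag and timestamp substitution. The real plugin supports further placeholders and may append a unique suffix to each key.

```python
from datetime import datetime, timezone

# Assumed default; check the S3 output plugin docs for your version.
DEFAULT_KEY_FORMAT = "/fluent-bit-logs/$TAG/%Y/%m/%d/%H/%M/%S"


def expand_key(tag: str, when: datetime, key_format: str = DEFAULT_KEY_FORMAT) -> str:
    """Fill in the strftime fields first, then substitute the record tag."""
    # Formatting the date before inserting the tag keeps any '%' in the tag literal.
    return when.strftime(key_format).replace("$TAG", tag)


example = expand_key("fluent-bit", datetime(2024, 3, 5, 14, 7, 9, tzinfo=timezone.utc))
# example == "/fluent-bit-logs/fluent-bit/2024/03/05/14/07/09"
```

Each slash-separated segment shows up as a folder in the S3 console, which is why the logs appear under year, month, day, and time subfolders.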
