Shifting from manual human-driven workflows to AI

For more than two decades, monitoring and observability workflows have remained largely unchanged. Even as systems scale and complexity explodes, the experience hasn’t evolved to match. Engineers pore over dashboards, chase down alerts, and manually decide what’s worth investigating.

As one customer put it, “It’s daunting for our users to stare at 70 million metrics and figure out ‘where do I look next?’”

Even advanced capabilities—like anomaly detection, correlations, and outlier views—stop at analysis. The user still has to recognize the meaningful deviation and choose where to begin.

That’s starting to shift. The industry is transitioning from manual, human-driven workflows toward AI-led, agentic ones—where automation takes on active decision-making roles.

Why are we in this transition? As adoption of coding agents and code automation tools increase, the speed of shipping and volume of changes is outpacing human-driven operations. To keep up, AI agents must also help handle more diagnosis and remediation, allowing engineers to focus on higher-order work.

The journey: Why the transition period matters most

Adopting agentic workflows isn’t an overnight transformation. It’s a journey—and, in our view, teams must pass through a transitional (guided) phase before safely reaching autonomous operations:

- Up to this point: Manual workflows—Engineers manually identify and investigate issues step by step using their observability platform and then manually remediate the issue.

- Current transition: Guided workflows—AI identifies potential issues and suggests hypotheses and next steps in the investigation, while engineers validate and manually remediate the issue.

- Future: Autonomous workflows—AI identifies the issues and attempts to remediate them; engineers supervise and intervene if required.

As we enter the transition period—where AI suggests and humans decide—the wins are immediate: faster insights, clearer next steps, and less manual toil. But this phase is more than a convenience—it’s a necessary step toward true autonomy. And trust must be earned.

Long term, we can’t ask teams to trade control for automation. We earn autonomy by proving confidence first—through clear reasoning trails, predictable behavior, safe failure modes, and rapid recovery paths. Scope expands only when both the evidence and practitioner feedback support it. That’s how guided workflows responsibly become agentic ones.

On this journey, the transition itself delivers value. As engineers review AI suggestions and note what works and what doesn’t, the system keeps learning. That not only improves future hypotheses but also turns “tribal knowledge” into shared context for other team members and the system itself.

Here’s the challenge: while this transition to AI-guided workflows is inevitable, the transition matters. The industry is moving quickly toward AI-powered observability solutions, but without the right foundations, we will only get tools that promise insight and deliver noise. To understand what actually works, we first need to examine what doesn’t—and why.

Why current AI approaches fall short

As the industry pushes toward AI-enabled observability, two approaches have emerged. Both promise to ease the investigation burden. Both fall short in critical ways.

Approach #1: Agents outside the platform

Agents running outside of the observability platform can access signals like alerts and the raw telemetry data through various APIs. But, raw telemetry only becomes useful when you add structure and context to show how things are connected.

Observability is no different to many other problem domains where these connections are best represented by a knowledge graph—which, in this case, links services to their dependencies and the infrastructure they run on. Agents running external to an observability platform struggle to recreate this knowledge graph representation and often have to act on the raw telemetry data, which lacks the structure and context required.

Approach #2: Platform features acting on standardized data

Even when AI is embedded inside the observability platform, the knowledge graph it builds is typically derived from the data generated by proprietary agents and their pre-configured integrations. But, the majority of meaningful context—especially in the application layer—lives in custom instrumentation. That creates a large blind spot right where insight matters most.

The common flaw across both approaches

Without an encompassing knowledge graph, LLMs are forced to “fill the gap” and they do so confidently. The resulting analysis often reads logical and well—that’s what LLMs are great at. But the results seldom point to the actual root cause in production. Engineers end up chasing red herrings that miss the mark.

So if both current approaches fall short, what does a better path forward look like?

The three foundations that enable trustworthy AI in observability

To move from guided workflows to full agentic automation, three foundational requirements must be met. Without these, AI remains confident, but unreliable.

Foundation #1: High-quality data layer

AI is only as good as the data you feed it. Yet observability data is inherently messy—it frequently contains inconsistent formatting and conflicting taxonomies. Without data normalization and enrichment, you end up with fragmented data. Data doesn’t align, relationships stay hidden, and symptoms can’t be tied to causes. This lack of alignment makes it hard for humans to reason about the data—let alone AI.

Anyone who has managed a complex system at scale knows that it is impossible to enforce consistency at data emission time, especially when each engineer has complete control over naming. Unfortunately, this is not a problem OpenTelemetry tackles—while it standardizes the protocol, it does not standardize data formats or taxonomy. A unified data layer solves this challenge by normalizing and aligning telemetry around shared meaning. This can be achieved via a combination of deriving, mapping and aliasing data dynamically after it has been emitted.

This foundation ensures that every engineer can access the data in the format they expect at query time, however, the data is also normalized and connected on the backend.

Foundation #2: A temporal knowledge graph

To reason about dynamic systems, AI needs more than structured telemetry—it needs a living model that connects both system data and human insight.

A temporal knowledge graph provides that model. It links the technical representation of the system with human input—the knowledge encoded in runbooks, incident notes, and resolution summaries. By combining these dimensions, the graph transforms disconnected data and human input into structured, queryable knowledge AI can reason with.

Unlike static service maps, a temporal knowledge graph continuously tracks how services, infrastructure, and dependencies evolve over time, which is critical for observability where the view of the changes over time are often more important than the current state. It also incorporates non-observability sources—deployments, config changes, feature-flag updates—alongside their related metrics, logs, and traces. Because many incidents are change-driven, connecting temporal context and change data to observability signals helps pinpoint causation, not just correlation.

While AI can traverse a well-structured knowledge graph, the issues are often nuanced. It’s easy to detect when an entire service or an availability zone is down. However, it’s much harder—and more useful—to detect when only a subset of requests, a specific user cohort, or a particular version of a service is impacted. Finding these issues requires deeper analysis of the underlying data properties and the traffic flows through the knowledge graph. This deeper analysis must be provided to the AI; it’s not something AI excels at natively.

Foundation 3: Transparency and learning over time

Black-box AI recommendations—no matter how accurate—don’t build the trust needed for teams to act on them confidently. Without understanding the reasoning, engineers can’t validate, adjust, or learn from AI insights.

Engineers should see the reasoning behind each suggestion. This means showing:

- What data was analyzed

- What anomalies and outliers were identified and which were ruled out

- Why certain hypotheses were suggested and ranked over others

Transparency enables human feedback, creating a loop that improves insights over time. That’s pivotal in production, where the same issues frequently recur. Each validation or correction teaches the system, making future suggestions more accurate and contextually relevant. This not only enriches the knowledge graph over time but also creates a shortcut: when the issue returns, the system surfaces the prior investigation to speed resolution.

The system may surface more context than any individual person knows, but it always shows its work. Thus, engineers at all levels can not only review and decide, but also learn from the reasoning and outcomes.

Introducing AI-Powered Guided Troubleshooting

For years, we’ve been laying the groundwork—clean, standardized data and our Temporal Knowledge Graph. So far, that foundation has powered Chronosphere Lens for automated service discovery/mapping and Differential Diagnosis (DDx) for queryless, correlation-driven troubleshooting. Now it unlocks AI-powered Guided Troubleshooting.

During any incident, our Guided Troubleshooting features automatically analyze and surface the most relevant signals, and suggest resolution paths grounded in evidence.

Each suggestion shows its reasoning, the data it considered, and the alternatives it ruled out. Engineers stay in control, able to validate, refine, or reject every step with full transparency.

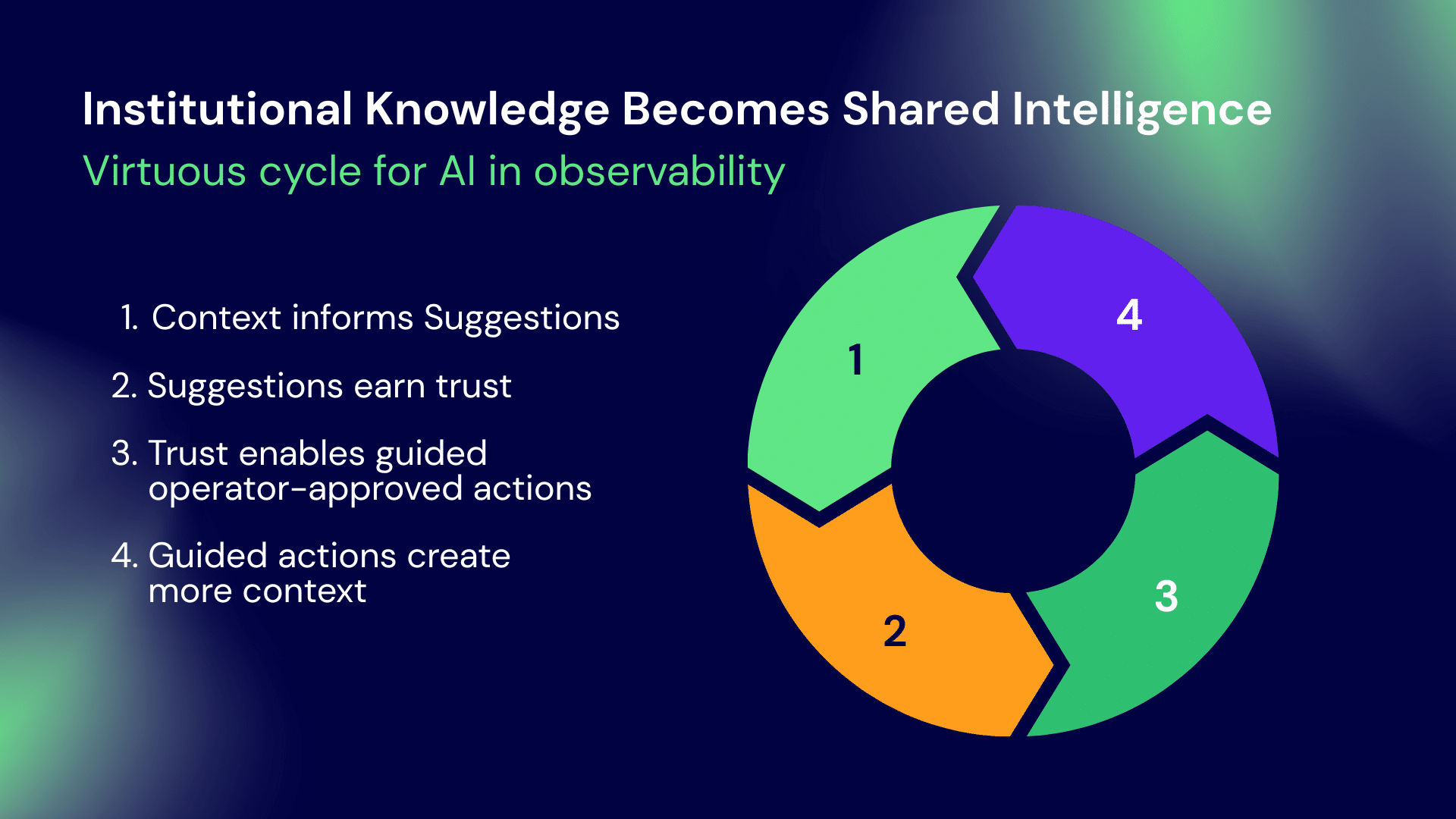

Every investigation feeds back into the Knowledge Graph, strengthening the system’s understanding and turning institutional knowledge into shared intelligence. Over time, this creates a virtuous cycle:

This is how guided workflows responsibly evolve into agentic ones—not through forced adoption, but through demonstrated value.

Step one in the journey toward autonomous observability

The future of observability will be faster, more reliable, and more human-friendly—not because humans are removed, but because AI finally has the context it needs to take on the work. After two decades of unchanged workflows, the transformation has begun.

“AIOps” has been promised for years. What’s emerging now is genuinely different. Past approaches focused on incident detection and summarization—without the deep analysis or root-cause reasoning needed for real operational decisions.

The question isn’t whether observability will become agentic—it will. The question is whether organizations will build that future on foundations that earn trust, maintain transparency, and empower engineers.

The organizations that will thrive in this transition aren’t those racing to deploy automation first. They’re the ones ensuring they have the foundations that make automation trustworthy, explainable, and genuinely intelligent. That’s the journey Chronosphere is on—and we’re just getting started.