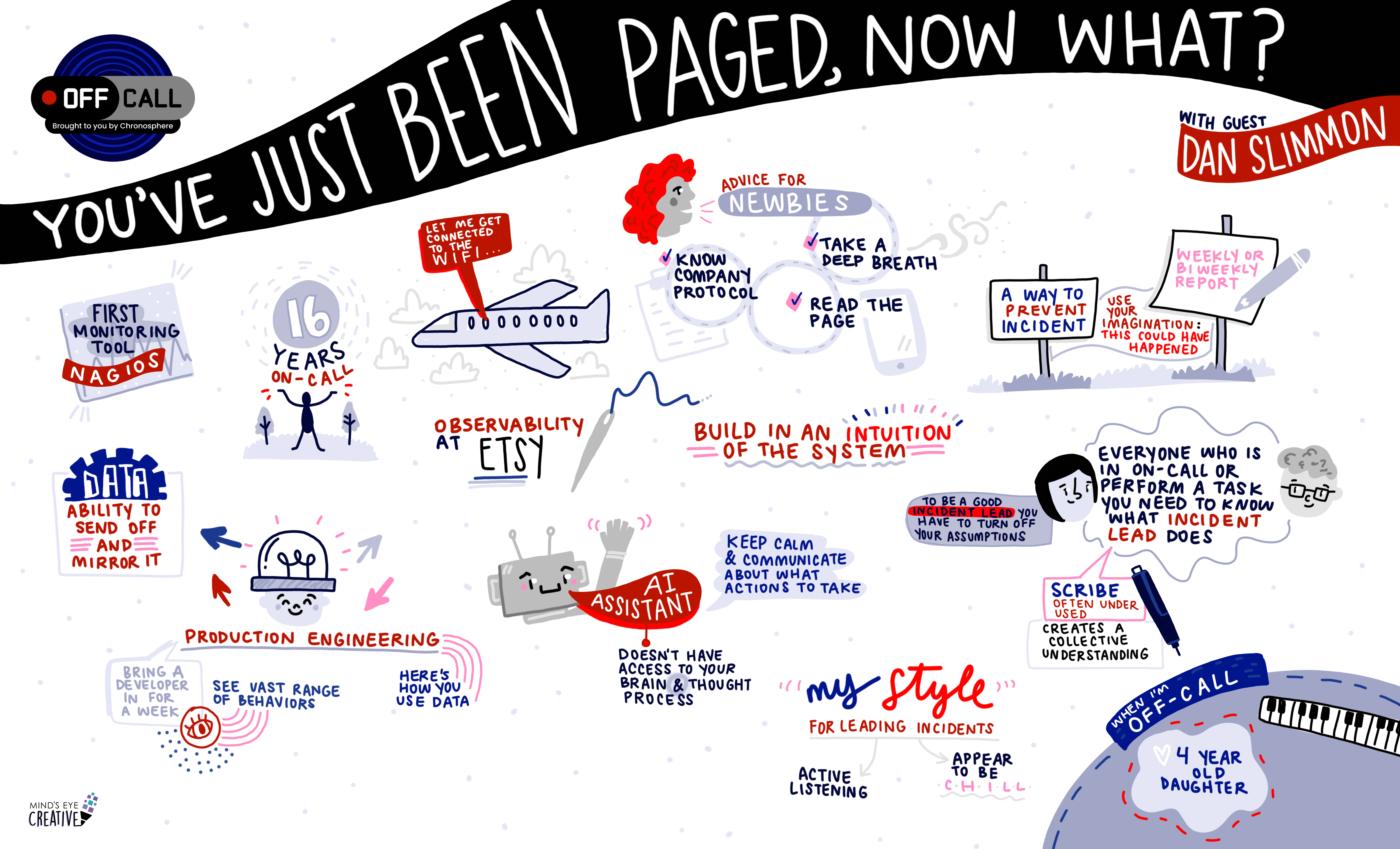

Off-Call with Paige Cruz: So You’ve Just Been Paged. Now What?

Featuring

Principal Developer Advocate

Paige Cruz is a Senior Developer Advocate at Chronosphere passionate about cultivating sustainable on-call practices and bringing folks their aha moment with observability. She started as a software engineer at New Relic before switching to Site Reliability Engineering, holding the pager for InVision, Lightstep, and Weedmaps. Off the clock, you can find her spinning yarn, swooning over alpacas, or watching trash TV on Bravo.

Infrastructure Engineer | Clerk

A DevOps engineer and SRE for 16 years, Dan has led observability and incident response programs for rapidly scaling companies like HashiCorp and Etsy. He’s a tireless advocate for applying scientific inquiry and medical diagnostic techniques to the field of software operations.

Overview

Paige Cruz sits down with Dan Slimmon to explore the nuances of systems and incident response. They talk about the challenges of managing a self-hosted observability stack and Dan’s unique on-call experiences, like troubleshooting an incident from an airplane!

They also dive into the Production Engineering initiative at HashiCorp, designed to immerse software engineers in proactively exploring observability data to gain a deeper understanding of system behavior. Of course, we cover the significance of understanding roles in incident management, like incident leads and scribes, and how to identify your personal style of leading incidents.

Transcript

Paige Cruz: Hello! Paige Cruz here, and today I am really stoked to share my conversation with Dan Slimmon, an expert in systems and incidents, and the man behind D2E, aka Down to Earth Engineering, your go-to for learning scientific incident response. We chat about oh-so-many things, including the challenges of operating a self-hosted observability stack, facilitating learning experiences for developers with the very cool production engineering rotation at HashiCorp, and what you can do to become a better incident lead.

I bet you’ll never guess the strangest place Dan has helmed an incident. Enjoy!

All right, welcome to Off-Call, the podcast where we meet the people behind the pagers. Today, I am delighted to be joined by Dan Slimmon, an incident response expert and educator. We’ll hop in with my favorite intro question: what was the first monitoring tool you used, and do you have fond memories of it?

Dan Slimmon: If we go all the way back to college, there wasn’t any monitoring. The monitoring was just me looking at the server.

Paige Cruz: Meat-based monitoring?

Dan Slimmon: Yeah, exactly. So the first actual tool I used was, of course, Nagios, which I sure did learn how to use. It wasn’t really good at anything in particular, but at least it had text-file configuration, which made things a little easier for me.

Paige Cruz: That has been a popular one. I have never actually pulled up the Nagios interface, but I’ve heard about the traffic light: red, yellow, green. That’s nice. There are some nice things they did there.

Dan Slimmon: Except I’m red green colorblind!

Paige Cruz: Oh my gosh.

Dan Slimmon: So it doesn’t even help me.

Paige Cruz: No. And they didn’t have a UI palette that you could toggle, no?

Dan Slimmon: No, I had to hover, I would hover over it with my mouse.

Paige Cruz: Oh my gosh.

Dan Slimmon: And it would show me the little color name as a tooltip.

Paige Cruz: Well, hopefully the tools provided today have improved. I’ve worked for a fair number of monitoring companies that had user groups with that same colorblindness, so we would test out different palettes.

So today, your options should be better.

Dan Slimmon: It’s a little better.

Paige Cruz: A little better. How many years were you on-call? I get the sense you have left the life of the pager behind you.

Dan Slimmon: Yeah, I have. I’ve been working jobs that required me to be on-call ever since I graduated from college, so like 16 years.

Paige Cruz: That is a long time on the pager. Oh my gosh.

Dan Slimmon: You’re telling me.

Paige Cruz: I lasted six years before I burned out and said, no, no, this is not the life for me. I’m married to someone who’s on-call, so I still get woken up at 3 a.m., but that’s just the life of being married to a software engineer.

Dan Slimmon: Same. I’m married to an emergency and critical care veterinarian, so she gets paged very often. I still get to hear one side of her incomprehensible conversations about medicine in the middle of the night.

Paige Cruz: The stakes are high there. I know a lot of folks will say, “Oh, what’s the risk if we have an incident? What’s the real worst-case impact scenario?” And I always think: you do not know how your customers are using your software, or the creative ways they’ve found to put it in their own critical paths.

So we do need to take on-call seriously.

Dan Slimmon: I 100% agree with that statement.

Paige Cruz: So in those 16 years, do you have a standout time, the strangest time or place you’ve been paged, where you thought, man, I’ve got to rethink this whole career path?

Dan Slimmon: Sometimes the page gets escalated to you because somebody else has some problem and can’t get on the call.

That’s when the really bad ones happen. You just sign onto the WiFi on the airplane, finally get it working, and you’re missing like 10 messages about an incident. The whole site’s down, and now you’re trying to fix it, doing a screen share over airplane WiFi.

Paige Cruz: 30,000 feet up there. I did not even think about incident response on airplane WiFi. That’s a big incident if you’re getting escalated to while en route. Oh my gosh.

Dan Slimmon: I mean, if it’s gone on for long enough for anybody to notice that I’m on an airplane, it’s a bad one.

Paige Cruz: Yes. Do you have any on-call advice for newbies, whether they are new to engineering or joining a new company? Because not every company practices incident response the same way.

Dan Slimmon: You gotta know your company’s incident response protocols really well. Have them written down, write them out on a Post-it or something on your desk, so you don’t get confused and start wasting time or calling the wrong people into the wrong place. Everybody knows that. The best piece of advice I can give personally to people who are new to being on-call is: when you get that page, take a deep breath.

Paige Cruz: Yes. That is gold.

Dan Slimmon: Nobody’s gonna know. That’s your last moment before you’re gonna be leading that incident, and you’re gonna take whatever emotions you’re feeling when you get paged into the incident with you. So take a deep breath. It lets you take a little step back from that wall of emotions and be ready to think rationally.

Paige Cruz: Absolutely. I don’t know that there is ever a stress-free incident, but I do think getting all that oxygen up into your brain and taking that deep breath can give you literal breathing room. My reaction to getting paged was always instant panic.

I assumed it was the worst-case scenario. Before I even read the alert or let PagerDuty tell me what it was, I was like, everything’s got to be down, I’m going to be the only one. You could really go Chicken Little and spiral. So I love that advice.

Dan Slimmon: That’s my second best piece of advice which is “Read the page.”

I think it’s very easy to see “Oh no, the website’s down, 500 errors” and immediately go start the call and get on it. Everybody’s going to expect you to be the incident lead and to know the most about what’s going on, so there’s nothing higher leverage when you get that page than reading it word for word before you get on the call.

Paige Cruz: If you happen to work at a place that has untrustworthy or unactionable alerts, take another second to actually look at what that alert query is and whether the results of that query match the intent of the alert. That has led me astray a couple of times, where I thought something was worse than it was and it turned out we just had a misconfigured monitor.

Still annoying.

Dan Slimmon: Somebody just changed the type of log messages that this thing emits, and now it looks like it’s totally down.

Paige Cruz: There is no shortage of interesting scenarios that pop up during incident response. Before we recorded, we talked a bit about your time at Etsy and how there was a really great culture of experimentation.

For me as an Etsy user, I can imagine when I load that homepage, there is not just one experiment running, but dozens, maybe hundreds of experiments going on. So what was the approach to managing that visibility and monitoring to know if something went wrong on that homepage load?

Dan Slimmon: On all the graphs that show the activity on the web page, the is-it-up-or-down, is-it-fast-or-slow type graphs, you could have an overlay that would show you a vertical line whenever a deploy happened, but also a vertical line whenever an experiment started or stopped.

The thing is, though, like you say, there could be dozens or hundreds of experiments running at a given time. So if you were looking at that overlay and seeing all the experiments, you would just see vertical line, vertical line, vertical line.

Paige Cruz: Like a barcode.

Dan Slimmon: So it would usually be better to check the access log or other logs. If you could find the request that generated the weird problem, which is another whole problem entirely, then you could check its request ID and see what experiments were applied to that user at that time, in case the overlay was too much.
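
To make the request-ID lookup concrete, here is a minimal Python sketch. It assumes a hypothetical log format in which each line is a JSON object carrying a `request_id` and an `experiments` list; Etsy’s actual log schema isn’t described in this conversation, so the field names and sample entries are illustrative only.

```python
import json
from typing import Iterable, List, Optional


def experiments_for_request(log_lines: Iterable[str], request_id: str) -> Optional[List[str]]:
    """Return the experiments recorded for request_id, or None if the ID never appears."""
    for line in log_lines:
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip lines that aren't JSON (banners, plain-text entries, etc.)
        if isinstance(entry, dict) and entry.get("request_id") == request_id:
            return list(entry.get("experiments", []))
    return None


# Hypothetical access-log lines; field names and experiment names are made up.
sample_log = [
    '{"request_id": "abc123", "status": 200, "experiments": ["new_search_ranking"]}',
    '{"request_id": "def456", "status": 500, "experiments": ["homepage_redesign", "free_shipping_banner"]}',
    "GET /healthz 200",
]

print(experiments_for_request(sample_log, "def456"))
# ['homepage_redesign', 'free_shipping_banner']
```

The hard part Dan mentions, finding the weird request in the first place, is outside this sketch; once you have its ID, the lookup is a simple scan, or an indexed query in a log store.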

Paige Cruz: Did you guys have a custom in-house visualizer that you had built or were you sending these kind of event markers onto a vendor? I always love talking about other people’s monitoring stacks.

Dan Slimmon: I mean, this was all before I was there, but Etsy was maybe the first company to be very public about what its in-house observability stack looked like and what they were doing. And they were doing things that nobody else was doing at the time, so they had to build a lot of it, right? Etsy built StatsD, and they contributed a lot to Elasticsearch and Graphite, which we used extensively. But it was all open source tools running on our own hardware in our data center, right?

Paige Cruz: Oh, I love that. Very early in the open source monitoring journey. Okay, so visualization was done through maybe Grafana, or?

Dan Slimmon: What did we use for the graphs? I guess we did use Grafana. Yeah.

Paige Cruz: I believe they have support for event markers, so that makes sense.

Dan Slimmon: We would draw a vertical line on there. I think in the early days we were maybe using the actual Graphite tool to display graphs, but that was not fun. That was janky. So I think we moved off of that pretty early.

Paige Cruz: That is what I hear. Although there are pockets of Graphite users still out in the wild today. And same with Nagios. I went to the website the other day and it is still a company that is running, offering their products. So there’s a use case for somebody.

Dan Slimmon: If you don’t overreach and you’re reliable, you can stay in the market for a long time.

Paige Cruz: The reliability of monitoring and observability platforms is a very interesting area, because I think where Etsy shone was putting a lot of operational excellence into running the in-house stack, which a lot of folks discount: “Oh, it’s cheaper to self-host.” Cheaper in what terms? Dollar amount? Certainly not operator hours spent keeping that thing up and running and scaling.

Dan Slimmon: It was constant. I was on the observability team for a while at Etsy, and we were in charge of keeping the whole observability stack up and running. Just the number of different use cases we had to support and optimize for made it basically an impossible task. Not to mention that we had all of our SOX compliance data stored in an ancient version of Elasticsearch that no one was ever allowed to touch or upgrade, because it had all the SOX data in it. So like 60% of the observability team’s job became just troubleshooting this horrible Elasticsearch cluster.

Paige Cruz: Oh man.

Dan Slimmon: If you don’t have to do that kind of thing, if you’re just shunting all your data off to Datadog or something…

Paige Cruz: When I was doing observability engineering, I was managing vendors, so I didn’t quite have the “Oh God, I’m responsible if this thing goes down” feeling. No, our vendor’s SREs are responsible if this thing goes down. It’s still not great for me and my team and my company to not have visibility, but it was very interesting to be on the other side of that client-vendor relationship.

Dan Slimmon: Yeah, it feels very different, right? I certainly wouldn’t advise anybody to run their own observability stack today, unless there’s just a Kubernetes, what do they call it? A Helm chart. If you’re early, you can just pull in something basic and run it. But even then, nothing’s easy to run at scale.

Paige Cruz: No. The lens I like to bring to these conversations is: what is your business selling? What is it offering as products or services? If it is not a monitoring or observability platform, do you really want to be committing all of those engineering hours?

Because ops folks these days are not cheap. Our time is very valuable. So would you rather have them working on scaling, hardening, and making your actual product more reliable, or futzing around with “Oh my God, we’ve dropped metrics because we tipped over our collector”?

Dan Slimmon: Yeah, absolutely. I think the ideal is that you’re sending all of your data off to some third party that can make pretty graphs and that’ll do alerts and log searches for you, but that you can also mirror it somewhere else if you need to.

I’ve missed that at companies where, for example, we were using Datadog and just using the Datadog collector to send stuff to Datadog. That’s all it does, so you don’t have the ability to take the data and pipe it into a script that can do some other kind of analysis that Datadog doesn’t do, or something like that.

Every once in a while that’s really helpful when you’re troubleshooting a problem or trying to understand a certain failure mode, so I recommend having some knob you can turn to send the data over here, too.
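
Dan’s “knob” can be sketched as a small fan-out router in Python. Everything here is hypothetical: `TelemetryRouter`, the sink callables, and the `mirror_enabled` flag are illustrative names, not part of Datadog, Fluent Bit, or the OpenTelemetry Collector, which provide this kind of routing through their own configuration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A sink is any callable that accepts one telemetry record (hypothetical interface).
Sink = Callable[[Dict], None]


@dataclass
class TelemetryRouter:
    """Hypothetical fan-out router: always ships to the primary sink, optionally mirrors."""

    primary: Sink
    mirrors: List[Sink] = field(default_factory=list)
    mirror_enabled: bool = False  # the "knob": off unless someone turns it on

    def emit(self, record: Dict) -> None:
        # The vendor (primary) path always receives the record.
        self.primary(record)
        if self.mirror_enabled:
            for sink in self.mirrors:
                # Each mirror gets its own shallow copy, so reassigning keys in one
                # mirror's copy doesn't change the original or other mirrors' copies.
                sink(dict(record))


# Usage: lists stand in for a vendor exporter and a local analysis script.
vendor_received: List[Dict] = []
local_received: List[Dict] = []
router = TelemetryRouter(primary=vendor_received.append, mirrors=[local_received.append])

router.emit({"metric": "http.5xx", "value": 1})
router.mirror_enabled = True  # turn the knob while troubleshooting
router.emit({"metric": "http.5xx", "value": 7})

print(len(vendor_received), len(local_received))  # 2 1
```

Keeping the mirror off by default means the extra path costs nothing until someone needs it; a real pipeline would also have to handle buffering and failure isolation, which this sketch ignores.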

Paige Cruz: Yes, that’s the rise of the telemetry pipeline, with things like Fluent Bit or even the pipelines within the OTel Collector letting you dual-route or tri-route or whatever.

The game is totally different from, I think, when both you and I were on-call for monitoring systems, but it is no small feat to rip and replace your proprietary collectors and agents or re-instrument with new protocols and standards.

So I think as an industry we’ll be on this journey for a long time. The dream is to change one config parameter and send your data to three places. If you are in that situation now, your company is cutting edge. Good job. Most of us are playing catch-up.

Dan Slimmon: Yeah, I agree.

Paige Cruz: We collect all of this monitoring data. You worked really hard at Etsy to make sure that monitoring and observability systems were up and running. And a lot of folks turn to these tools during an incident.

I think you should be logging in every day and you should have a good finger on the pulse of what your part of the system is doing. However, most folks open up that tool during an incident. And incidents are not something we get formally taught how to participate in, or even how to navigate these different monitoring or observability tools.

Earlier off mic, we talked about a really exciting project you ran at HashiCorp, this production engineering volunteer rotation. Tell us a little bit about that. How did it get started? What were the goals and is it still running today?

Dan Slimmon: Thanks for asking. It was this thing called production engineering. We named it before Facebook came out with their thing called production engineering; it’s a different thing.

To explain what we did in production engineering: throughout my career, I have essentially been not so great at getting projects out the door on time, because I always had graphs of the system’s current behavior in the corner of my eye, and something would catch my eye and I’d be like, “Whoa, what’s up with that?”

And I’d go figure it out and get to the bottom of it. That has given me a really strong intuition for what kinds of behaviors the systems I operate exhibit. So I wanted to transfer that to software engineers, who are maybe used to developing software in a development environment, testing it in a staging environment, getting it into production, checking that it looks like it’s working, and then, okay, next feature, because they’re on a time crunch.

I wanted them to see the vast range of different behaviors that a complex system actually undergoes in production and how to reason about them. On production engineering, some weeks we had somebody and some weeks we didn’t, but on a weekly basis we would bring a developer in for a week and look together at the graphs and logs, whatever they wanted to look at. I would say, “All right, where do you want to look?” “Let’s look at the logs of this service that I worked on recently.” “Okay.” So we’d go look there, and then we’d just sort it, scroll it, zoom in, zoom out until we saw something that made one of us say, “Huh, that’s weird.”

Paige Cruz: Famous beginning words, not famous last words. “That looks funky” is the start of a beautiful investigation.

Dan Slimmon: Exactly. It was like the inventor Röntgen, I think, the guy that discovered X-rays: he had left his candy bar near the machine that was generating these rays, and he came back and it was melted. And he was like, that’s weird. That sort of thing. Eventually you get X-rays that way. The same thing happens to us. We’re operating a system that follows rules unlike any other system’s rules, and we have to scientifically determine what rules it’s following under what circumstances by getting this feedback from the production behavior. So it’s super valuable. People learned a ton. We had repeat people coming back because they were so interested and found it so useful to see how you use data to get to the bottom of production behaviors.

Paige Cruz: I find learning experiences like these intrinsically valuable. I don’t think we should have to justify spending time learning skills on the job, or just spending time with your system that you won’t otherwise get during the week. However, leadership always likes to know what we’re getting for our time and our money.

Over the long period of time that you ran this, were there interesting patterns? If you had to say, here were the outcomes, here’s how you could maybe pitch this type of project at your company what could folks bring as data points?

Dan Slimmon: This is why I didn’t do it until I was pretty late in my SRE career: it’s very hard to do the managing up for a program like this. The way I framed it at HashiCorp, at least, was as a way to prevent incidents.

Essentially, my reasoning was this: if you have an incident, you have a failure, and you go do a postmortem on it and figure out what contributing factors led to it. Empirically, many of those are things that were already true about the system before the incident started. If you had looked for them, you potentially could have found them. That’s evidence that if you look for surprising phenomena in the system before you have an outage, use them to update your mental model of how the system works, get that in sync with how things actually work, and maybe make a fix, then some of those incidents just won’t happen. That’s the way I presented it to management.

I would write up a weekly or biweekly little report that said, “We had this engineer and this engineer on rotation with us. We found this and this issue.” Using my imagination, I could paint you a picture of how things could have gone if we had not found these issues. Then nobody bothered me anymore about doing it, because they figured it looked like we were preventing incidents. So, great.

Paige Cruz: Nice. That stream of outcome reports is not only valuable for managing up to leadership, but also for folks new to the company, or folks who learn best by exploring how things break.

I would rather read your incident analysis than your documentation most of the time.

Dan Slimmon: Absolutely. I would argue there’s no other way. You can build a software system to however tight tolerances you want, make it exactly the way you want it to be, and then once you deploy it into production, it’s subject to the whims of whatever random traffic customers are going to send to it, what’s happening on the network, and what else is happening in your data center. It’s going to start exhibiting behaviors that you could not possibly predict before you go look at the production data. And the best production data is how the system fails, or how it behaves in ways that the designers didn’t expect.

Paige Cruz: I often learned the most about my system when writing up my detailed postmortems and incident analyses. Oh, now I’ve got to back up my claims here and actually find the data, or get that real-time system architecture diagram from a service map or whatever. Oh, there are some things in the hot path I didn’t even realize were there. This type of learning experience taps into that problem-solving nature, because some folks are motivated to build and some folks are motivated to solve problems.

When you open up production and take a look at what the heck is going on, you will find more problems than you know what to do with. So I really recommend that folks think about bringing this practice into their org, starting small, even just blocking off that time for yourself.

The first thing I would do whenever I joined a company was set aside time every week just to go explore the system within the observability tool. It got me a lot further than reading docs. I love documentation, but not everybody’s great at it, and if there’s not a strong culture of it in your organization, then the truth really lies within your observability system. So you should be checking it out.

Dan Slimmon: 100%. So, to harp a bit more on your point from before: when you go into production, you find way more problems than you could ever hope to fix. That intimidates some people when they first go to look at the data, because just looking at a huge array of log entries, you don’t know which things are really possibly problems and which things are just noise. The only way out of that is through. You find the thing that looks the most surprising to you in the moment, you dig in the code, you dig in the data, and you get to the bottom of it. Either it’s a real problem, and you can go fix it, or it’s not, and you get to update your understanding of the system so that next time you look for a surprise, you won’t go down that rabbit hole. Over time, by virtue of having to distinguish real problems from not-real problems, you develop an intuition for the system that beats anything you could achieve just by thinking really hard about the code.

Paige Cruz: At the whiteboard, yeah, thinking about all the failure cases. Oh, totally. I ran a similar learning experience, but it was limited to one hour, because that was all I could get at my org. I would try to choose an alert that was flapping or just looked really noisy when there wasn’t an actual issue going on, and I would make a lot of assumptions.

This is what’s happening, this is the fix to tune the alert, and once we finish up this hour, this alert will never bug us again unless it’s real. And every single time, not once, not twice, every single time that I made assumptions about where the investigation would go and what the results would be, I was totally wrong. It really humbles you when you actually look at this data: what I think up here and what’s actually going on can be a very big gap sometimes.

Dan Slimmon: And it only gets bigger the longer you spend not looking at the actual behavior.

Paige Cruz: Absolutely. When it comes to incident response, especially at these large companies that you’ve worked for, there’s never really just one thing that’s going wrong.

A lot of incident response boils down to how effectively you can communicate and collaborate across multiple roles. There has been a lot of ado in the market about the role of AI and how it can or cannot solve all of our problems. When you think about well-run incident response and the needs of the humans, what role, if any, do you think an AI assistant or AI features can play?

I’m trying to be more open minded about it these days, but you already know my bias towards humans need to lead the charge here.

Dan Slimmon: That’s great that you’re trying to be more open minded. I’m not really trying. I’ve seen a couple of attempts to make an AI produce an incident summary or an incident analysis, and it’s not there. I certainly wouldn’t want to listen to an AI tell me what to do on an incident call. I’m sure there are legitimate things you can do with it around the edges of the process, but what makes a team really effective at responding to an incident, like a high-profile incident on a tight timeline, is their ability to keep calm and communicate their own thought process to each other across team boundaries and across skill boundaries: to really clearly talk about their conception of the problem and agree on what action to take and why. All of that goes on in your head first, and an AI doesn’t have access to anything that hasn’t come out of our mouths yet.

All that stuff has to be practiced and perfected inside your own head. That’s what I like to focus on. I like to teach people how to do incident response with no tools, or with as few tools as you can have. And that’s possible, I know, because I’ve done it. Once you learn to do that, you can start picking away at the pieces of the response that a human doesn’t really need to do, and you can outsource those to some tool, AI or otherwise.

Paige Cruz: I always think, okay, maybe it’s helpful for status page updates. And then I go, that is externally facing customer communication… that is probably bound by an SLA. Do you and your lawyers really want to leave that up to chance? Or do you want to spend an hour thinking through: here are our three different severity levels, or here are the different ways our product can be impacted, let’s make a quick template for each of those? And there you go. Then you’ve got it forever, and you didn’t even need to outsource it to AI. I’m still trying to find that use case. I haven’t found it yet.

Dan Slimmon: And you still have a support army, right? At your company, you have people who do customer success or support, and they know how to write status messages to customers. They do it all day in Zendesk. So they don’t need an AI to write that for them. You only need the AI if you don’t do that every day.

Paige Cruz: A lot of the time, incident response and processes tend to focus on the engineers, on the SREs, maybe up to executives if you’ve got the escalation paths figured out.
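
Paige’s pre-written templates could look something like this minimal Python sketch using the standard library’s `string.Template`. The three severity levels and all message wording are hypothetical placeholders; real templates would follow your own severity definitions, SLA commitments, and legal review.

```python
from string import Template

# Hypothetical severity levels and wording, written once ahead of time.
STATUS_TEMPLATES = {
    "SEV1": Template("We are investigating a major outage affecting $component. "
                     "Next update in $next_update_minutes minutes."),
    "SEV2": Template("Some customers may experience degraded performance in $component. "
                     "We are investigating. Next update in $next_update_minutes minutes."),
    "SEV3": Template("We are aware of a minor issue affecting $component and are working on a fix."),
}


def render_status_update(severity: str, **fields: str) -> str:
    """Fill in the pre-approved template for a severity level.

    Template.substitute raises KeyError if a placeholder is missing, so an
    incomplete update fails loudly instead of publishing a half-filled message.
    """
    return STATUS_TEMPLATES[severity].substitute(**fields)


print(render_status_update("SEV2", component="search", next_update_minutes="30"))
# Some customers may experience degraded performance in search. We are investigating. Next update in 30 minutes.
```

Using `substitute` rather than `safe_substitute` is the deliberate choice here: during an incident, a loud error beats a customer-facing message with a literal `$component` in it.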

What are the other roles that we need to consider when doing incident response training or thinking about updating the process?

Dan Slimmon: Anybody who is in an on-call rotation needs to know what an incident lead is and what their responsibilities are. Anybody who might be called upon to perform some task in an incident needs to know what an incident lead is, and at least that if you get called by one, you should do what they say on the incident call.

The other roles that I see as most useful are, first, a primary investigator. There needs to be somebody whose job it is to look at the technical nitty-gritty and figure out the coding part of the solution. Otherwise, even if the incident lead happens to have the same skill set as that person, they can get drawn into the technical part of it, forget that incident leading is about common ground and communication, and forget to do those things.

And then the scribe.

Paige Cruz: Oh, I love the scribe.

Dan Slimmon: I love the scribe too, but I think it’s often underused. People will tell the scribe, “Here are the events you need to write down. Put them in timestamped little notes: this happened, then this happened, then this happened.”

To me, the right role of a scribe is to preserve the collective understanding of the incident response effort. So the scribe’s main job, I think, should be to keep an up-to-date incident summary at the top of the document that says: here’s the customer impact, here’s our best guess as to what’s going on, and here are some other guesses that we have.

That puts the scribe into the conversation, because they have to ask very useful questions to fill in that summary.

Paige Cruz: I think that is the best part: it is a dedicated role to say, “Why? What is it? What does this mean to you? You just linked to this chart in your monitoring tool and sent a sad face emoji. What did that mean? Walk us through your thought process there. We’re not all drawing the same conclusion.” I’ve been in so many incidents where it’s very chaotic and three different teams are off thinking that it’s their part of the world and doing different investigations. I think I had pretty good incident practices, but I’m sure I’m also guilty of sending a chart at some point thinking, clearly, you all will understand what this means. And no!

While I try to stay level-headed in an incident, I don’t know that there’s ever been a calm incident that proceeds with everybody taking their turn to talk and everyone following the book. Most of the time, something has gone haywire, or there’s a little bit of chaos in the mix.

Dan Slimmon: That’s right. And things break down very quickly. The collective understanding breaks down very quickly unless you have the scribe, or somebody, writing the current state down so that everybody can look at it, compare what’s in their head to what’s on the page, and, if there are any discrepancies, have a conversation about that. That’s critical.
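
The scribe’s running summary can be modeled as a tiny data structure. This is a hypothetical Python sketch, not a format Dan prescribes: the `IncidentSummary` fields simply mirror the three things he lists (customer impact, best guess, other guesses), and the sample values are invented.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class IncidentSummary:
    """Hypothetical 'current understanding' block kept at the top of the incident doc."""

    customer_impact: str
    best_guess: str
    other_hypotheses: List[str] = field(default_factory=list)

    def render(self) -> str:
        # Plain text so it can be pasted at the top of any shared document.
        lines = [
            "CURRENT SUMMARY",
            f"Customer impact: {self.customer_impact}",
            f"Best guess: {self.best_guess}",
        ]
        if self.other_hypotheses:
            lines.append("Other hypotheses:")
            lines.extend(f"  - {h}" for h in self.other_hypotheses)
        return "\n".join(lines)


# Invented example values; the scribe edits the fields as the call's understanding changes.
summary = IncidentSummary(
    customer_impact="Checkout returns 500 errors for some users",
    best_guess="Bad deploy of the payments service",
    other_hypotheses=["Database connection pool exhaustion"],
)
print(summary.render())
```

Because the summary is overwritten rather than appended to, anyone joining the call reads the group’s current understanding first instead of reconstructing it from a timeline.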

Paige Cruz: I love that. It sounds like we have a lot of very similar philosophies and processes around incident response, which can be rare because every single company has their own flavor of how to do it. And hopefully they’ve written it down. Not everybody has.

When you are educating folks on incident response what can they expect to learn? What can they expect to show up? What do they need to prepare to be a good student?

Dan Slimmon: I think anybody can be a good incident lead, but you gotta turn off some of your assumptions about what that entails from the very beginning.

If I could give somebody homework before I teach them how to do incident response, it’s this: think about the skills you practice day to day in your job, which are different from the skills any other role at your company practices. Try to identify which of those skills are most in line with your job as an incident lead and, conversely, which skills an incident lead seems to need that are not part of your job.

Getting good at leading incidents is largely about developing a style that suits your strengths and pushes your disinclinations onto someone else that you can delegate that job to.

Paige Cruz: I like that your incident lead approach is based on your own personal style and flavor. So if I hear you right, you're saying that there is not one model for "this is how you absolutely need to act as an incident leader." It's more about understanding your role and your responsibilities, matching them to your strengths, and then leaning on others to complement the things you're not so great at.

Dan Slimmon: Absolutely. There are so many different kinds of situations that can arise. Basically anything that your organization knows how to handle gets handled further up the chain, and anything that falls through is something your organization doesn't yet know how to handle.

As an incident lead, you have to be ready to deal with all kinds of weird pathologies, both social and technical. So you can't be rigid. You have to be adaptive to the situation. And the only way to really be adaptive is to follow your own instincts and inclinations as to how to deal with things.

You gotta be professional. You gotta be professional and serious.

Paige Cruz: Ground rules.

Dan Slimmon: But everybody knows you're putting it on. Everybody's performing a role, and that's part of the role.

Paige Cruz: One last question on this section before we get to you and off-call. How would you describe your style of leading incidents?

Dan Slimmon: I tend to be, or at least appear, so chill during an incident that managers wonder if I actually know how bad the problem is and whether we're doing anything. And I fake that, of course. You have to; that's what you do. My style is largely about actively listening to what everybody else is talking about on the call, making sure that people are understanding each other and not talking past each other, and that everybody's getting answers to the questions they need. I'm very much a "connect this person with that other person" type of leader, rather than an "Okay, I'm making an executive decision, here's the thing we're doing right now" type. That works really well for some people, but it's not my style.

Paige Cruz: Oh, totally. Homework for everyone listening, think through what your style is for leading incidents and what do you wish it was? Maybe come talk to Dan to take his course and learn a little bit more about that.

Dan Slimmon: Yeah, please do. The course is called Leading Incidents, and it launched June 12th. If you're listening to this, you can use the coupon code off call to get 20% off the course. Go check it out at d2e.engineering/leading.

Paige Cruz: And we will put that link in the show notes.

Definitely check it out if you are thinking, "Oh my gosh, I appreciate these values and philosophies of running incidents, and my company so doesn't do it that way." Sign up your team. Bring more folks along on the learning journey, because incidents are not a solo task. They are a big collaboration.

And this brings us to our final section about you and off-call. Of course, on-call dominates a lot of an SRE’s time, space, and energy and it takes us away from things we would rather be doing. What was on-call taking you away from? What are your hobbies and interests?

Dan Slimmon: I have a 4-year-old, and I'm in charge of getting her to school and back and so on. That's huge. She's the light of my life. She's the best. Her name's Phoebe. When I have other time, I play music. I play piano; I have since I was a kid. I play a lot these days, a lot of pop tunes. Your David Bowies, your Princes. That's a lot of fun. And I read a lot. I read a lot of sci-fi.

Paige Cruz: Ooh! Any recommendations? I just got into Octavia Butler.

Dan Slimmon: Oh, okay. Yes, I do.

We'll talk after. But right here, behind my head, is the Red Mars series by Kim Stanley Robinson. That's my favorite science fiction series I've ever read. If anybody listening is looking for a sci-fi book, read Red Mars.

Paige Cruz: I do think there are a lot of common overlapping interests in the SRE space.

We're all a little bit built the same in that way: sci-fi, music. And I am sure that the calm you have during incidents helps a lot with parenting, because from what I hear, there are a lot of incidents you've got to respond to as a parent, whether it's a missing juice box or "I left my shoe at school."

Dan Slimmon: There's a lot of that. You hear a loud noise from the other room, and you just take a deep breath, incorporate what you know about the situation, and say, "Um, Phoebe, what was that?"

Paige Cruz: With that, thank you so much for chatting today. And again, you can find Dan's course and more about him in the show notes below. Thank you for a lovely episode.
