Overcoming Observability Challenges in AI and LLM Environments

Traditional observability tools struggle to handle the scale, elasticity, and complexity of AI infrastructure and LLM applications, leading to increased costs, performance degradation, and slower troubleshooting.

Downtime and Latency Damage Customer Trust

Many observability tools can’t keep up with AI workload demands, so latency and reliability problems surface when it matters most, and every outage or delay erodes customer trust.

Traditional Observability Buckles Under Data Load

AI and LLM workloads create huge, unpredictable data volumes. Training drives sustained load, while inference causes sudden spikes during real-time queries.

Runaway Costs Divert Resources From AI Innovation

Without mechanisms to optimize, shape, and filter data based on relevance and usage, companies pay for large amounts of redundant and low-value telemetry, draining budgets that could fuel AI research and development.

The Solution: Chronosphere Observability Platform

Chronosphere helps AI companies control observability costs and complexity in high-volume, unpredictable environments. It reduces data volumes by 84% on average, which lowers costs. It supports all telemetry types (metrics, events, logs, traces) from various sources (OpenTelemetry, Prometheus, Datadog, and more) at the scale AI workloads require, with the ability to process over 2B data points per second. Chronosphere delivers the reliability you need with the cost control you want.

Scale Seamlessly With AI Workload Demands

Handle massive data volumes from training workloads and unpredictable spikes from inference operations. Process over 2B data points per second without performance degradation.

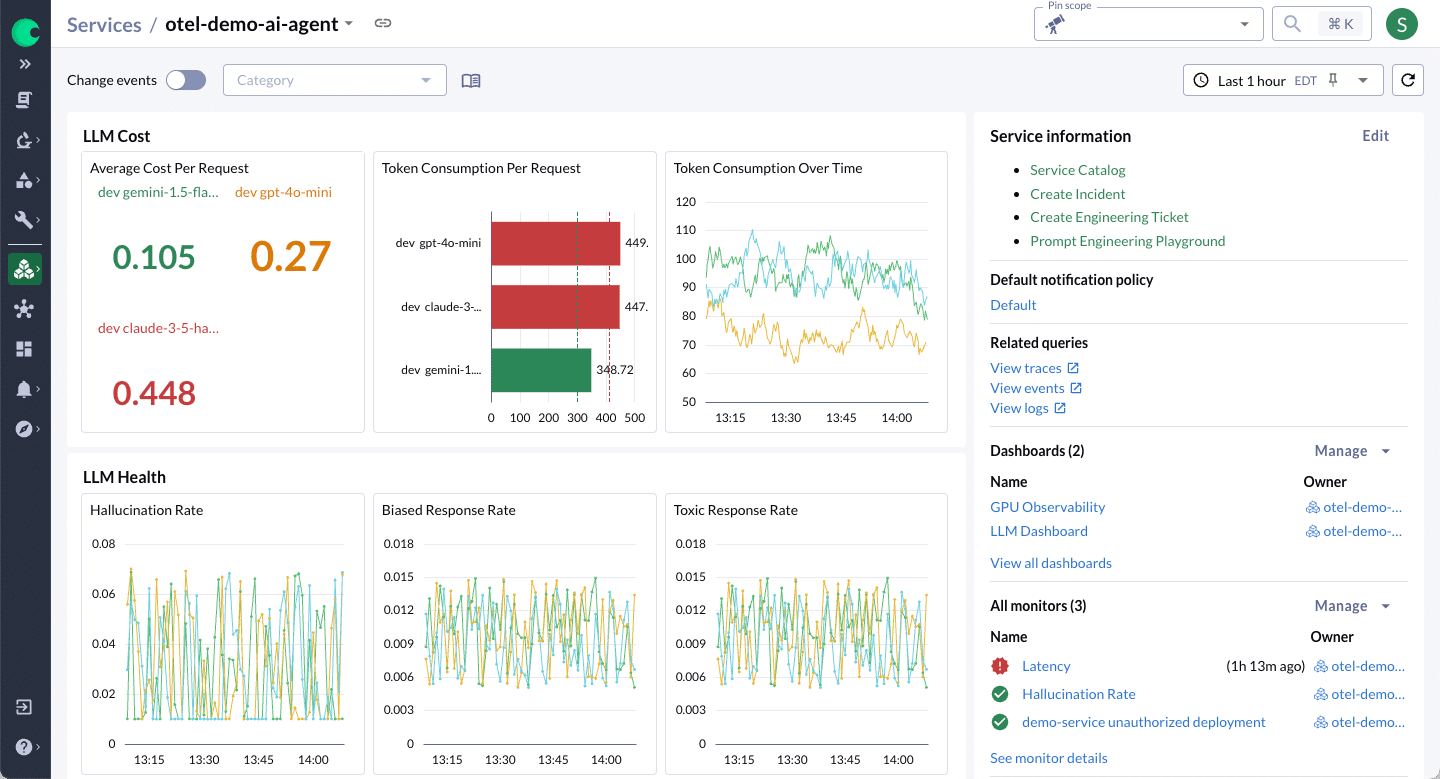

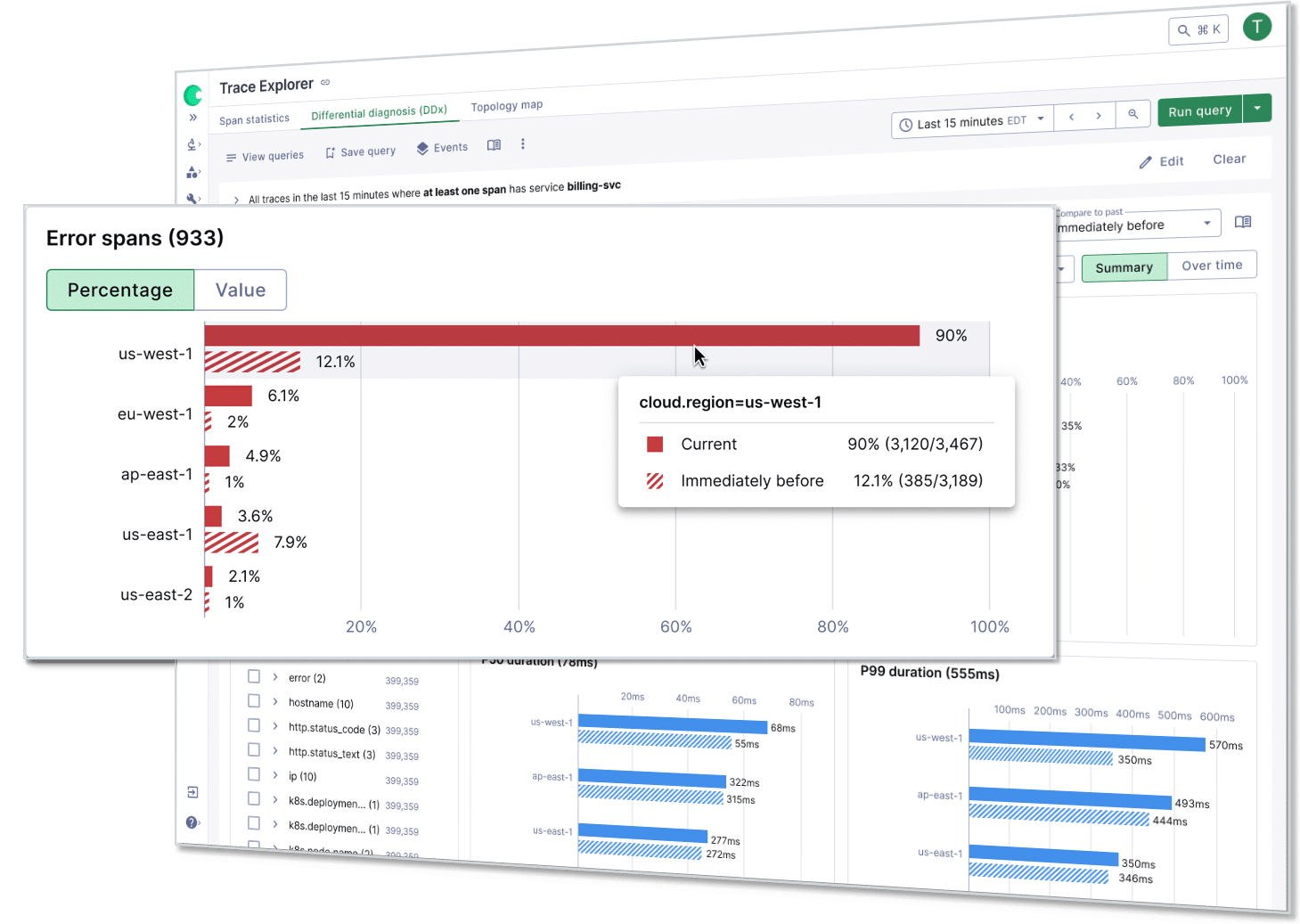

Resolve Issues Faster to Maintain AI Service Quality

Empower developers of all experience levels to quickly identify the source of service issues without deep system knowledge or complex query writing. Differential Diagnosis (DDx) surfaces potential problem areas through a simple point-and-click investigation process, eliminating reliance on system experts.

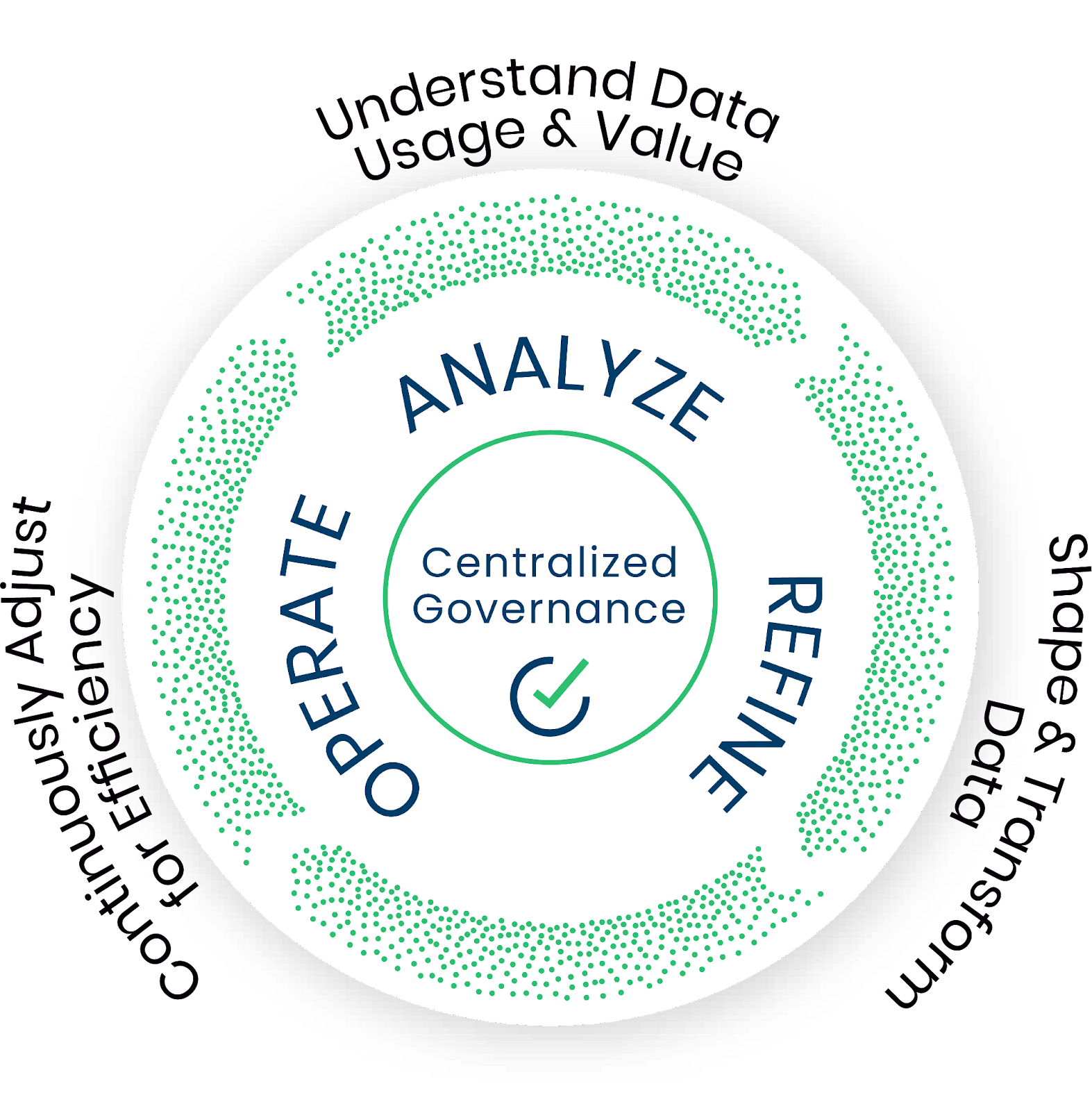

Control Observability Data Volumes and Costs

Pay only for data that provides value. Our Control Plane enables you to identify and keep only the data your team actually uses. Customers achieve an 84% reduction in telemetry data volume, on average.

Proven Results

84% Average Telemetry Data Volume Reduction

75% Reduction in Critical Incidents

99.99% Historically Delivered Uptime