Editor’s Note: This excerpt from the first chapter of the Manning book Platform Engineering on Kubernetes explores working with a cloud native application in a Kubernetes cluster. It covers local vs. remote clusters, core components and resources, and common challenges, using real-world examples (e.g., the Conference application), open source tools, and the latest Kubernetes best practices. To read the entire chapter, download the book here.

When I want to try something new, a framework, a new tool, or just a new application, I tend to be impatient; I want to see it running immediately. Then, when it is running, I want to dig deeper and understand how it works. I break things to experiment and validate that I understand how these tools, frameworks, or applications work internally. That is the sort of approach we’ll take in this chapter.

To have a cloud native application up and running, you will need a Kubernetes cluster. In this article, you will work with a local Kubernetes cluster using a project called KinD (Kubernetes in Docker). This local cluster will allow you to deploy applications locally for development and experimentation.

To install a set of microservices, you will use Helm, a project that helps package, deploy, and distribute Kubernetes applications. You will install the walking skeleton services, which implement a Conference application.

Once the services for the conference application are up and running, you will inspect its Kubernetes resources to understand how the application was architected and its inner workings by using kubectl. Once you get an overview of the main pieces inside the application, you will jump ahead to try to break the application, finding common challenges and pitfalls that your cloud native applications can face.
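The inspection step described above can also be scripted. The following is a minimal sketch, assuming `kubectl` is on your PATH and already points at the cluster. The helper names `kubectl_get_args` and `list_resources` are made up for illustration, and the `default` namespace is an assumption rather than something the Conference application requires.

```python
import json
import shutil
import subprocess


def kubectl_get_args(kinds, namespace="default"):
    """Build the argument list for `kubectl get <kinds> -n <namespace> -o json`."""
    return ["kubectl", "get", ",".join(kinds),
            "--namespace", namespace, "--output", "json"]


def list_resources(kinds, namespace="default"):
    """Return (kind, name) pairs, or an empty list when kubectl is not installed."""
    if shutil.which("kubectl") is None:
        return []
    result = subprocess.run(kubectl_get_args(kinds, namespace),
                            check=True, capture_output=True, text=True)
    # kubectl wraps the matching objects in a list under the "items" key.
    items = json.loads(result.stdout)["items"]
    return [(item["kind"], item["metadata"]["name"]) for item in items]
```

Calling `list_resources(["deployments", "pods", "services"])` against a running cluster gives a quick inventory of what the application is made of, which is a useful starting point before you begin breaking things.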

This article covers the basics of running cloud native applications in a modern technology stack based on Kubernetes, highlighting the good and the bad that come with developing, deploying, and maintaining distributed applications.

Running our cloud native applications

To understand the innate challenges of cloud native applications, we need to be able to experiment with a simple example that we can control, configure, and break for educational purposes.

In the context of cloud native applications, “simple” cannot mean a single service. Even a simple application forces us to deal with the complexities of distributed applications, such as network latency, resilience to the failure of some of the application’s services, and eventual inconsistencies.
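To make resilience to failure a bit more concrete, here is a minimal sketch of one common technique: retrying a call to another service with exponential backoff. Everything in it is hypothetical and for illustration only. `ServiceUnavailable`, `call_with_retries`, `make_flaky_service`, and the delay values are assumptions, not part of the Conference application.

```python
import time


class ServiceUnavailable(Exception):
    """Hypothetical error raised when a downstream service does not answer."""


def call_with_retries(call, attempts=4, base_delay=0.01):
    """Invoke `call`, retrying with exponential backoff on ServiceUnavailable."""
    for attempt in range(attempts):
        try:
            return call()
        except ServiceUnavailable:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            time.sleep(base_delay * 2 ** attempt)  # wait longer after each failure


def make_flaky_service(failures_before_success):
    """Build a stand-in service that fails a fixed number of times, then answers."""
    state = {"calls": 0}

    def service():
        state["calls"] += 1
        if state["calls"] <= failures_before_success:
            raise ServiceUnavailable("no response")
        return f"answered on call {state['calls']}"

    return service
```

For example, `call_with_retries(make_flaky_service(2))` returns `"answered on call 3"`: the caller never sees the first two failures, but it does pay for them in added latency, which is exactly the kind of trade-off a single-service application never has to make.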

To run a cloud native application, you need a Kubernetes cluster. Where this cluster is going to be installed and who will be responsible for setting it up are the first questions that developers will have. It is quite common for developers to want to run things locally, on their laptop or workstation, and with Kubernetes, this is possible—but is it optimal? Let’s analyze the advantages and disadvantages of running a local cluster against other options.

Choosing the best Kubernetes environment for you

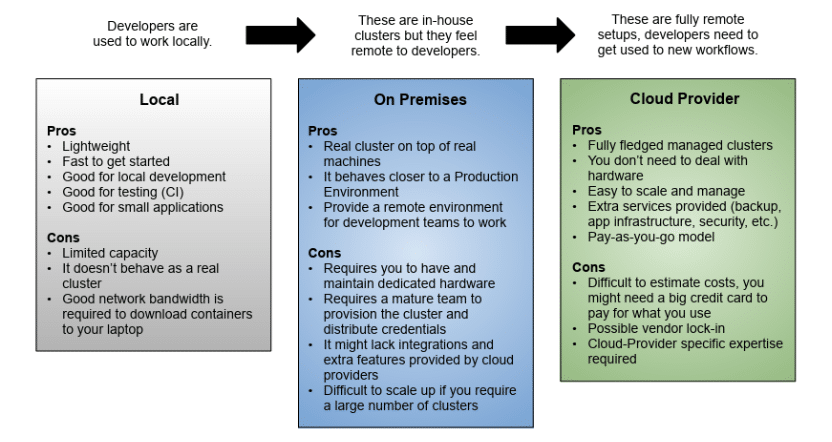

This section is not a comprehensive list of the available Kubernetes flavors. Instead, it focuses on common patterns in how Kubernetes clusters can be provisioned and managed. There are three alternatives, each with advantages and drawbacks:

Local Kubernetes on your laptop/desktop computer

I tend to discourage people from running Kubernetes on their laptops. As you will see in the rest of the book, running your software in environments similar to production is highly recommended to avoid problems that can be summed up as “but it works on my laptop.” These problems are mostly caused by the fact that when you run Kubernetes on your laptop, you are not running on top of a real cluster of machines. Hence, there are no network round-trips and no real load balancing.

- Pros: Lightweight, fast to get started, and good for testing, experimenting, local development, and running small applications.

- Cons: Not a real cluster: it behaves differently and has less hardware available to run workloads. You will not be able to run a large application on your laptop.

On-premises Kubernetes in your data center

This is a typical option for companies with private clouds. This approach requires the company to have a dedicated team and hardware to create, maintain, and operate these clusters. If your company is mature enough, it might have a self-service platform that allows users to request new Kubernetes clusters on demand.

- Pros: A real cluster on top of real hardware behaves more like a production cluster. You will also have a clear picture of which features are available to your applications in your environments.

- Cons: It requires a mature operations team to set up clusters and give credentials to users, and it requires dedicated hardware for developers to work on their experiments.

Managed Kubernetes service from a cloud provider

I tend to be in favor of this approach, because using a cloud provider service allows you to pay for what you use, and services like Google Kubernetes Engine (GKE), Azure Kubernetes Service (AKS), and Amazon Elastic Kubernetes Service (EKS) are all built with a self-service approach in mind, enabling developers to spin up new Kubernetes clusters quickly. There are two primary considerations:

- You need to choose one cloud provider and have an account with a big credit card to pay for what your teams will consume. This might involve setting budget caps and defining who has access. If you are not careful, selecting a cloud provider can also leave you locked in to that vendor.

- Everything is remote, and for developers and other teams that are used to working locally, this can be a big change. It takes time for developers to adapt, because the tools and most of the workloads will run remotely.

Working remotely is also an advantage, because the environments your developers use and the applications they deploy will behave as they would in a production environment.

- Pros: You are working with real (fully fledged) clusters. You can define how many resources you need for your tasks, and when you are done, you can delete them to release resources. You don’t need to invest in hardware up front.

- Cons: You need a potentially big credit card, and you need your developers to work against remote clusters and services.

A final recommendation is to check the following repository, which lists free Kubernetes credits offered by major cloud providers: https://github.com/learnk8s/free-kubernetes

I’ve created this repository to keep an updated list of these free trials that you can use to get all the examples in the book up and running on top of real infrastructure. The figure below summarizes the information contained in the previous bullet points.

Kubernetes cluster: Local vs. Remote setups

While all three options are valid and each has drawbacks, in the next post you will use KinD (Kubernetes in Docker) to deploy the walking skeleton in a local Kubernetes environment running on your laptop or PC. Check the step-by-step tutorial to create the local KinD cluster that we will use to deploy our walking skeleton, the Conference application.

Keep in mind that this tutorial creates a local KinD cluster that simulates having three nodes and sets up a special port mapping so that our Ingress controller can route the incoming traffic that we send to http://localhost.
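To give a feel for what such a cluster definition looks like, here is a minimal sketch that renders a KinD configuration file. It rests on assumptions: the split into one control-plane node and two workers, and the mapping of port 80, are guesses made for illustration, and the tutorial’s actual configuration may differ (for example, in the number of workers or in extra settings the Ingress controller needs). The function name `kind_config` is hypothetical.

```python
def kind_config(workers=2, host_port=80):
    """Render a KinD cluster config: one control-plane node plus `workers` workers.

    The node layout and port numbers are illustrative assumptions.
    """
    lines = [
        "kind: Cluster",
        "apiVersion: kind.x-k8s.io/v1alpha4",
        "nodes:",
        "- role: control-plane",
        "  extraPortMappings:",
        # Forward a port on your machine to the same-purpose port on the node,
        # so traffic sent to localhost can reach an Ingress controller.
        "  - containerPort: 80",
        f"    hostPort: {host_port}",
        "    protocol: TCP",
    ]
    lines += ["- role: worker"] * workers
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(kind_config(), end="")
```

You could save the output to a file such as `kind-config.yaml` and pass it to `kind create cluster --config kind-config.yaml`. Each “node” is a container on your machine, which is why the cluster only simulates a multi-node setup.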

Download the ebook to explore installing the conference application with a single command.

Frequently Asked Questions

How should I learn Kubernetes and cloud native applications?

Use a hands-on “break things to learn” approach: get applications running immediately, then experiment by intentionally breaking them to understand how they work internally.

Should I run Kubernetes locally on my laptop?

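Generally not for anything that needs to resemble production. Local Kubernetes leads to “but it works on my laptop” problems because it lacks the real network round-trips and load balancing that exist in production clusters. It is still a lightweight, fast way to test, experiment, and develop small applications locally.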

Where can I get free Kubernetes resources to practice?

The repository at https://github.com/learnk8s/free-kubernetes keeps an updated list of free trials and credits from major cloud providers.
