Why GenAI matters now for platform teams

As PETech (an imaginary company, created by the authors, that struggles with inefficient practices for deploying and operating software in production) continued its platform engineering journey, it ran into the complexity of optimizing cloud infrastructure across the many available options, such as virtual machines, serverless, and Kubernetes. Each option carried its own trade-offs in cost, performance, scalability, and operational overhead.

Using GenAI to optimize infrastructure choices

Historically, selecting the optimal infrastructure has required extensive manual analysis and experimentation, leading to inefficiencies and suboptimal resource utilization.

To address this challenge, PETech used GenAI to streamline and optimize its cloud infrastructure decision-making. By implementing a GenAI model trained on PETech’s specific application patterns, historical usage data, and infrastructure requirements, the team developed a solution that could dynamically predict the best runtime configuration for each application.

The model was built on a large language model (LLM) that generated insights for evaluating infrastructure choices against multiple criteria, such as:

- Cost efficiency

- Performance benchmarks

- Scalability potential

- Operational simplicity
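A weighted scoring matrix is one simple way to picture how infrastructure options can be compared across the criteria above. The sketch below is illustrative only and is not PETech’s model. The normalized scores (0 to 1, higher is better) and the weights are hypothetical values, standing in for the benchmarks or LLM-generated assessments a team would actually supply.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    # Hypothetical normalized scores in [0, 1]; higher is better for every criterion.
    cost_efficiency: float
    performance: float
    scalability: float
    operational_simplicity: float


# Hypothetical weights for one workload's priorities; they sum to 1.0.
WEIGHTS = {
    "cost_efficiency": 0.4,
    "performance": 0.3,
    "scalability": 0.2,
    "operational_simplicity": 0.1,
}


def weighted_score(option: Option, weights: dict[str, float]) -> float:
    """Combine per-criterion scores into a single weighted sum."""
    return sum(getattr(option, criterion) * w for criterion, w in weights.items())


def rank(options: list[Option], weights: dict[str, float]) -> list[tuple[str, float]]:
    """Return (name, score) pairs sorted from best to worst."""
    scored = [(o.name, round(weighted_score(o, weights), 3)) for o in options]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    candidates = [
        Option("virtual machines", 0.5, 0.8, 0.5, 0.4),
        Option("serverless", 0.8, 0.6, 0.9, 0.9),
        Option("kubernetes", 0.6, 0.8, 0.9, 0.3),
    ]
    for name, score in rank(candidates, WEIGHTS):
        print(f"{name}: {score}")
```

Changing the weights, for example by favoring performance over cost, can change the ranking. For that reason, the weights should reflect each workload’s priorities rather than a single global default.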

Drawing on concepts from recent research, including 2024 advances in GenAI for cloud optimization, PETech’s approach used retrieval-augmented generation (RAG) to retrieve real-time information on cloud services and pricing. This contextual awareness enabled the model to recommend the most cost-effective, high-performing infrastructure setup for each application’s workload characteristics.

As a result, PETech achieved a 30% reduction in infrastructure costs and a 40% improvement in application performance.

AI across the SDLC: From idea to operations

GenAI’s impact on PETech extended beyond infrastructure optimization, reaching various stages of the software development lifecycle (SDLC) by automating and enhancing traditionally manual processes. According to recent industry findings, GenAI’s ability to process and generate natural language has transformed requirements generation: LLMs can automatically translate user inputs, business goals, and functional requirements into well-defined user stories and technical specifications.

From requirements to architecture

PETech used this capability to streamline requirements gathering. By feeding raw customer feedback and high-level business objectives into its GenAI system, the team generated comprehensive user stories and acceptance criteria, drastically reducing the time spent on manual documentation and improving alignment with stakeholder needs.

In the architectural design phase, GenAI improved design quality and reduced time-to-market. Research has shown that LLMs trained on architectural patterns and best practices can generate detailed architectural diagrams, data models, and design blueprints.
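For the requirements step described above, generated user stories still benefit from a mechanical check before they reach the backlog. The sketch below validates LLM output against two widely used conventions: the "As a <role>, I want <goal>, so that <benefit>" story template and Given/When/Then acceptance criteria. Both conventions, and the check itself, are assumptions for illustration rather than a description of PETech’s system.

```python
import re
from dataclasses import dataclass, field

# Assumed story template: "As a <role>, I want <goal>, so that <benefit>".
STORY_PATTERN = re.compile(
    r"^As an? (?P<role>.+?), I want (?P<goal>.+?),? so that (?P<benefit>.+)$",
    re.IGNORECASE,
)
# Assumed acceptance-criterion form: "Given ..., when ..., then ...".
CRITERION_PATTERN = re.compile(r"^Given .+ when .+ then .+$", re.IGNORECASE)


@dataclass
class StoryCheck:
    valid: bool
    problems: list[str] = field(default_factory=list)


def check_story(story: str, criteria: list[str]) -> StoryCheck:
    """Flag generated stories that need human review before entering the backlog."""
    problems = []
    if not STORY_PATTERN.match(story.strip()):
        problems.append("story does not follow the role/goal/benefit template")
    if not criteria:
        problems.append("story has no acceptance criteria")
    for criterion in criteria:
        if not CRITERION_PATTERN.match(criterion.strip()):
            problems.append(f"criterion is not in Given/When/Then form: {criterion!r}")
    return StoryCheck(valid=not problems, problems=problems)


if __name__ == "__main__":
    result = check_story(
        "As a platform engineer, I want self-service test environments, "
        "so that I can validate changes faster",
        ["Given a merged pull request, when the pipeline runs, then a test environment is created"],
    )
    print(result.valid)  # True
```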

PETech integrated GenAI into its design workflow to automatically create data flow diagrams, entity-relationship diagrams (ERDs), and system architectures from project requirements. For example, when PETech started a new microservices-based project, its GenAI model analyzed the desired system functionality and automatically proposed an architecture covering service boundaries, API endpoints, data storage solutions, and security considerations.

This expedited the design process and improved the usability and maintainability of the resulting product by ensuring consistency with industry best practices.

The figure below shows a high-level view of the SDLC and where GenAI tooling fits in.

AI-assisted development and review

The development phase benefited significantly from GenAI’s code generation and review capabilities. Using LLMs trained on PETech’s codebase, open-source repositories, and industry-standard coding practices, PETech’s developers could automatically generate boilerplate code and scaffolding for new projects.

According to recent platform engineering literature, AI-augmented coding tools have proven effective at increasing developer productivity and reducing coding errors. PETech saw this firsthand: its GenAI model provided real-time code suggestions, reducing the amount of boilerplate developers had to write by hand.

Furthermore, the GenAI system conducted smart code reviews, offering immediate feedback on code quality, potential bugs, and adherence to coding standards. This automated review process accelerated code quality assurance, reducing the time to identify and fix issues by 50% and enabling developers to focus on more complex problem-solving tasks.

Legacy modernization and CI/CD automation

GenAI also played a pivotal role in modernizing PETech’s legacy codebases, a challenge common in large enterprises. Refactoring monolithic architectures into more modular and maintainable structures is typically time-consuming and error-prone.

However, using LLMs trained on architectural refactoring patterns and best practices, PETech’s GenAI system could analyze the legacy code, identify tightly coupled modules, and recommend decomposition strategies. This enabled the platform engineering team to break down the monolithic codebase into manageable microservices, facilitating smoother deployments, easier maintenance, and more efficient resource utilization.
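Before or alongside asking an LLM for decomposition boundaries, teams often compute structural signals from the code’s dependency graph. The sketch below is one such heuristic and is not PETech’s system. It takes a hypothetical module-level import graph, flags pairs of modules that import each other (a common sign of tight coupling), and computes Robert C. Martin’s instability metric, I = Ce / (Ca + Ce), where Ce counts a module’s outgoing dependencies and Ca its incoming ones.

```python
from itertools import combinations


def mutual_dependencies(graph: dict[str, set[str]]) -> list[tuple[str, str]]:
    """Return module pairs that import each other (bidirectional edges)."""
    pairs = []
    for a, b in combinations(sorted(graph), 2):
        if b in graph.get(a, set()) and a in graph.get(b, set()):
            pairs.append((a, b))
    return pairs


def instability(graph: dict[str, set[str]]) -> dict[str, float]:
    """Instability I = Ce / (Ca + Ce) per module.

    Ce (efferent coupling): modules this module depends on.
    Ca (afferent coupling): modules that depend on this module.
    """
    modules = set(graph) | {dep for deps in graph.values() for dep in deps}
    afferent = {m: 0 for m in modules}
    for deps in graph.values():
        for dep in deps:
            afferent[dep] += 1
    result = {}
    for m in sorted(modules):
        ce = len(graph.get(m, set()))
        ca = afferent[m]
        result[m] = ce / (ca + ce) if (ca + ce) else 0.0
    return result


if __name__ == "__main__":
    # Hypothetical import graph of a legacy monolith.
    legacy = {
        "billing": {"orders", "customers"},
        "orders": {"billing", "inventory"},
        "inventory": set(),
        "customers": set(),
    }
    print(mutual_dependencies(legacy))  # [('billing', 'orders')]
    print(instability(legacy))
```

Mutually dependent pairs such as `billing` and `orders` are natural candidates either to merge into one service or to separate behind an explicit interface. In practice, the graph would come from a static-analysis tool rather than being written by hand.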

Recent platform engineering research emphasizes that AI-driven code refactoring is crucial for organizations seeking to modernize their technology stack without disrupting production systems. PETech also applied GenAI to streamline operational work such as continuous integration and continuous delivery (CI/CD).

GenAI tools were integrated into PETech’s CI/CD pipelines to automate test case generation, run performance benchmarks, and monitor system health. For instance, LLMs could identify gaps in test coverage by analyzing the codebase and suggesting missing unit tests, reducing the likelihood of defects slipping into production.
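As a rough, non-LLM baseline for the coverage-gap analysis described above, the sketch below uses Python’s standard `ast` module to list a module’s public functions and report the ones whose names never appear in the test source. This is a naming heuristic, not real coverage measurement (a tool such as coverage.py is the usual choice for that). The module and test sources are hypothetical.

```python
import ast


def defined_functions(source: str) -> set[str]:
    """Names of public top-level functions defined in a module."""
    tree = ast.parse(source)
    return {
        node.name
        for node in tree.body
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
        and not node.name.startswith("_")
    }


def referenced_names(test_source: str) -> set[str]:
    """Every bare name and attribute name that appears in the test code."""
    names = set()
    for node in ast.walk(ast.parse(test_source)):
        if isinstance(node, ast.Name):
            names.add(node.id)
        elif isinstance(node, ast.Attribute):
            names.add(node.attr)
    return names


def untested_functions(source: str, test_source: str) -> list[str]:
    """Public functions never referenced by the tests: candidates for new unit tests."""
    return sorted(defined_functions(source) - referenced_names(test_source))


if __name__ == "__main__":
    module_src = '''
def apply_discount(total, pct):
    return total * (1 - pct)

def refund(order):
    return order["total"]

def _helper():
    pass
'''
    test_src = '''
from shop import apply_discount

def test_apply_discount():
    assert apply_discount(100, 0.1) == 90
'''
    print(untested_functions(module_src, test_src))  # ['refund']
```

The resulting list is the kind of input an LLM could then use to draft the missing tests.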

PETech’s operations teams also used GenAI for predictive scaling and anomaly detection, relying on AI-generated insights to anticipate traffic spikes and adjust resource allocation in real time, thereby maintaining system performance and reliability.
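Production anomaly detection is usually handled by a monitoring system or a trained model. The sketch below only illustrates the underlying idea with a rolling z-score over a hypothetical requests-per-second series. The window size and threshold are assumed values, not PETech’s.

```python
from statistics import mean, stdev


def zscore_anomalies(series: list[float], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Return indices whose value deviates from the preceding `window` points
    by more than `threshold` sample standard deviations."""
    anomalies = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Flat history: any change at all is treated as a deviation.
            if series[i] != mu:
                anomalies.append(i)
            continue
        if abs(series[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


if __name__ == "__main__":
    # Hypothetical requests per second, with a spike at index 6.
    rps = [100, 102, 98, 101, 99, 100, 400, 101]
    print(zscore_anomalies(rps))  # [6]
```

Note that the spike inflates the window statistics for the next few points. That trade-off is one reason real systems often use more robust baselines, such as medians or seasonal models.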

The advancements at PETech illustrate the profound impact of GenAI in platform engineering, enabling organizations to optimize infrastructure, streamline SDLC processes, and enhance overall efficiency. By incorporating GenAI into its platform engineering strategy, PETech improved its operational metrics and established a scalable, adaptable platform that can evolve with the changing technological landscape.

The convergence of GenAI and platform engineering, as seen through PETech’s journey and supported by the latest research, underscores the potential of AI-driven methodologies in transforming the future of software development and infrastructure management.

Enhancing LLMs with Retrieval-Augmented Generation (RAG)

One of the significant challenges in applying LLMs within platform engineering is ensuring that the generated solutions are accurate and relevant to the organization’s specific needs. LLMs are powerful, but their responses are sometimes generic or lack the contextual nuance needed for complex engineering tasks.

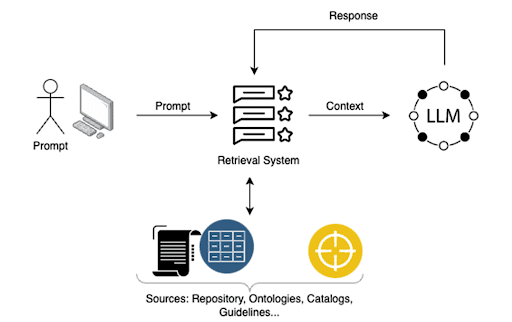

Retrieval-augmented generation (RAG) addresses this challenge by enabling LLMs to retrieve information from external sources, such as knowledge bases, documentation, ontologies, and guidelines, and use it to improve the quality and contextual relevance of their responses. This approach helps ensure that AI-generated solutions are accurate and aligned with the organization’s specific standards, best practices, and operational requirements.
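The following minimal sketch makes the retrieval step concrete by ranking knowledge-base documents against a query. Real RAG systems typically use an embedding model and a vector store. This version swaps in bag-of-words cosine similarity so that it runs with only the standard library. The documents are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase word counts; a stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return the ids of the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda doc_id: cosine(q, tokenize(docs[doc_id])), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    knowledge_base = {
        "deploy-guide": "Deployment guidelines: use blue green deployment for stateless services.",
        "coding-std": "Coding standards: Python services must use type hints and structured logging.",
        "db-runbook": "Runbook: restore the Postgres database from the nightly backup.",
    }
    print(retrieve("how should we deploy a stateless service", knowledge_base, k=1))  # ['deploy-guide']
```

Exact word matching misses related forms such as "deploy" and "deployment". Capturing that kind of semantic similarity is the main reason production systems use embeddings.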

Why RAG matters for accuracy and governance

RAG can significantly improve decision-making in platform engineering by incorporating domain-specific knowledge into the LLM’s output. For instance, PETech integrated RAG into its GenAI models to improve the quality of generated solutions. The team built an ecosystem of internal knowledge repositories, including documentation on architectural patterns, design principles, coding standards, deployment guidelines, and operational playbooks.

When an LLM is queried for generating architecture diagrams, code suggestions, or design documentation, the RAG mechanism retrieves relevant information from these repositories, ensuring that the generated solutions are precise and contextually aligned with PETech’s established practices.
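Once the relevant passages are retrieved, they are combined with the request into a grounded prompt. The sketch below shows one common layout: numbered sources with ids, an instruction to answer only from them, and then the question. The template wording, the `Passage` type, and the character budget are assumptions for illustration, not PETech’s prompt or any particular vendor’s API.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    source_id: str  # hypothetical document identifier, e.g. a runbook name
    text: str


def build_prompt(question: str, passages: list[Passage], max_chars: int = 2000) -> str:
    """Assemble a RAG prompt, stopping before the context exceeds `max_chars`."""
    context_lines = []
    used = 0
    for i, p in enumerate(passages, start=1):
        line = f"[{i}] ({p.source_id}) {p.text}"
        if used + len(line) > max_chars:
            break  # passages are assumed to arrive ranked best-first
        context_lines.append(line)
        used += len(line)
    context = "\n".join(context_lines) if context_lines else "(no relevant context found)"
    return (
        "Answer using only the context below and cite sources by number.\n"
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    print(build_prompt(
        "Which deployment strategy should the payments service use?",
        [Passage("deploy-guide", "Use blue-green deployments for stateless services.")],
    ))
```

Citing sources by number makes it easier to trace a generated recommendation back to the internal guideline it came from.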

This integration of RAG into the GenAI model allowed PETech to tailor outputs more accurately, improving the precision of automated code reviews and reducing false positives in anomaly detection.

The figure below shows how RAG enriches prompts with retrieved context:

Operationalizing RAG: ontologies, runbooks, and telemetry

A crucial aspect of RAG’s effectiveness in platform engineering is its ability to incorporate ontologies and structured data into retrieval.

Ontologies define the relationships between concepts within a domain and give the LLM a structured framework for understanding its context. By leveraging ontologies, PETech’s RAG-enabled LLMs could recognize and classify domain-specific terms, technologies, and practices, allowing the models to generate more sophisticated and contextually aware responses.

For example, when generating a solution for a microservices architecture, the LLM could use the ontology to understand the relationships between services, communication protocols, and deployment strategies, leading to more coherent and applicable architectural diagrams and guidelines.
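One lightweight way to picture how an ontology informs retrieval is to store domain relationships as subject-predicate-object triples and expand a query with related concepts before searching. The triples and predicate names below are hypothetical. Production systems would more likely use RDF/OWL tooling or a graph database.

```python
from collections import defaultdict

# Hypothetical domain ontology as (subject, predicate, object) triples.
TRIPLES = [
    ("orders-service", "communicates_via", "grpc"),
    ("orders-service", "depends_on", "payments-service"),
    ("payments-service", "communicates_via", "kafka"),
    ("payments-service", "deployed_with", "canary"),
    ("grpc", "is_a", "communication-protocol"),
    ("canary", "is_a", "deployment-strategy"),
]


def build_graph(triples: list[tuple[str, str, str]]) -> dict[str, set[tuple[str, str]]]:
    """Index triples in both directions so related concepts are reachable."""
    graph = defaultdict(set)
    for s, p, o in triples:
        graph[s].add((p, o))
        graph[o].add((f"inverse_{p}", s))
    return graph


def expand(concept: str, graph: dict[str, set[tuple[str, str]]], depth: int = 1) -> set[str]:
    """Concepts within `depth` hops of `concept`, used to enrich a retrieval query."""
    frontier, seen = {concept}, {concept}
    for _ in range(depth):
        nxt = {o for c in frontier for _, o in graph.get(c, ())} - seen
        seen |= nxt
        frontier = nxt
    return seen - {concept}


if __name__ == "__main__":
    g = build_graph(TRIPLES)
    print(sorted(expand("payments-service", g)))  # ['canary', 'kafka', 'orders-service']
```

A query about the payments service can then also retrieve guidance on Kafka and canary deployments, even though the query never mentions them.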

Furthermore, RAG can access a wide range of documentation and guidelines that drive the quality of generated results. This includes developer documentation, API references, compliance guidelines, and best practice manuals.

At PETech, the RAG mechanism was designed to pull from an extensive repository of internal and external documentation sources. For example, when developers needed to integrate a new cloud service, the RAG-enabled LLM would retrieve relevant API documentation, cloud provider best practices, and PETech’s internal guidelines for cloud adoption. This ensured that the generated code snippets, deployment scripts, and configuration files adhered to industry standards and PETech’s internal policies, reducing the risk of misconfigurations and security vulnerabilities.

Another critical advantage is that RAG improves the quality of the information the LLM works from.

By narrowing the information space to contextually appropriate data, RAG keeps the LLM focused on high-quality sources, which leads to more consistent and reliable outcomes. For instance, rather than relying on a vast, unstructured dataset, PETech curated a collection of high-quality documents, including design documents, post-mortem analyses, and operational runbooks. These documents were then used in the retrieval process to inform the LLM’s outputs.

As a result, the LLM was able to generate solutions that were accurate and reflective of PETech’s accumulated knowledge and experience, enhancing the overall quality and relevance of the AI-generated insights.

By incorporating RAG into platform engineering workflows, organizations can use LLMs more effectively to generate innovative, practical solutions. RAG enables an intelligent retrieval system that continually evolves as new knowledge is added to the repositories.

As a result, PETech’s GenAI models stayed up to date with the latest architectural patterns, coding standards, and operational guidelines. When a new microservices pattern was introduced or compliance requirements changed, the RAG mechanism made the new information available for retrieval, maintaining the relevance and accuracy of the LLM’s outputs.
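With RAG, keeping guidance current means updating the retrieval index rather than the model weights. The minimal sketch below assumes a hypothetical in-memory store keyed by document id. Upserting a newer version replaces the older one, so searches only ever return current guidance. The document texts are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Doc:
    doc_id: str
    version: int
    text: str


class KnowledgeIndex:
    """Hypothetical in-memory index that keeps only the newest version of each document."""

    def __init__(self) -> None:
        self._docs: dict[str, Doc] = {}

    def upsert(self, doc: Doc) -> bool:
        """Store `doc` unless a same-or-newer version already exists. Returns True if stored."""
        current = self._docs.get(doc.doc_id)
        if current is not None and current.version >= doc.version:
            return False
        self._docs[doc.doc_id] = doc
        return True

    def search(self, term: str) -> list[Doc]:
        """Naive case-insensitive substring search; a real system would query embeddings."""
        term = term.lower()
        return [d for d in self._docs.values() if term in d.text.lower()]


if __name__ == "__main__":
    index = KnowledgeIndex()
    index.upsert(Doc("logging-policy", 1, "Retain application logs for 30 days."))
    index.upsert(Doc("logging-policy", 2, "Retain application logs for 90 days to meet the new audit rule."))
    print([d.version for d in index.search("retain")])  # [2]
```

Rejecting stale versions matters when documents are ingested from several sources in no guaranteed order.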

Preparing for the next step: Improving the quality of results

GenAI performance is largely a function of training methodology and data quality. For platform engineering, effective LLM fine-tuning requires vetted, domain-specific datasets such as codebases, architectural artifacts, infrastructure definitions, and operational telemetry. The core challenge is calibration: choosing appropriate epoch budgets and applying transfer learning without over- or under-fitting. To learn how to ensure the quality of GenAI-generated solutions through efficient training, download the rest of the book.

FAQs

What are the main benefits of using GenAI in platform engineering?

GenAI streamlines infrastructure decision-making, automates requirements generation, accelerates architectural design, and augments code generation and review processes. As a result, organizations can reduce costs, enhance performance, and improve efficiency across the entire software delivery lifecycle.

How do Large Language Models (LLMs) improve the software development lifecycle (SDLC)?

LLMs enable automation of user story creation, architectural diagrams, and code generation. They allow engineering teams to translate high-level objectives and customer feedback into actionable requirements and technical specifications, accelerating development while maintaining quality and alignment with business needs.

What is Retrieval-Augmented Generation (RAG), and why does it matter in platform engineering?

RAG is an approach that allows LLMs to retrieve information from internal or external knowledge sources to produce more accurate and contextually relevant responses. In platform engineering, this means AI-generated solutions align better with organizational standards, documentation, and best practices, improving code quality and reducing misconfigurations.

How can GenAI accelerate modernization of legacy codebases in platform engineering?

GenAI models trained on refactoring patterns and best practices can analyze monolithic architectures, identify tightly coupled modules, and recommend decomposition strategies. This helps teams migrate to modular microservices architectures with reduced manual effort and risk.

What role does GenAI play in continuous integration and delivery (CI/CD) for platform engineering?

GenAI automates test case generation, performance benchmarking, and real-time system monitoring within CI/CD pipelines. It also enables predictive scaling and anomaly detection, allowing teams to proactively maintain performance and reliability as workloads change.

Effective Platform Engineering

Platform engineering isn’t just technical—it unlocks team creativity and collaboration. Learn how to build powerful, sustainable, easy-to-use platforms.