What is the collector?

Chronosphere is a cloud-native metrics monitoring solution built on top of M3, designed to reliably and efficiently ingest, store, query, and alert on metrics at massive scale. To get started, though, you first need to send your metrics to the Chronosphere backend, and that is the job of the Chronosphere collector.

Note: this blog assumes a basic understanding of Kubernetes and its terminology (e.g., pod, manifest, DaemonSet). While most terms are hyperlinked or explained below, you can also use the Kubernetes glossary as a resource if needed.

The Chronosphere collector is responsible for ingesting metrics from a customer’s various applications and then sending them along to the Chronosphere backend. It is the only Chronosphere software deployed in customer environments.

Most Chronosphere customers use Kubernetes to manage their clusters, where each node runs one or more pods. There are multiple ways to deploy and configure the collector within a cluster, depending on the customer's use case. For example, a customer can run one or many collectors within a cluster or node, and a collector can serve an entire node or just a single pod within a node.

How does it work?

The collector uses Kubernetes informers, including informers for ServiceMonitor resources, to pass the latest information about the pods in a node or cluster to its processing layer. With this information, the collector knows which applications or pods to collect metrics from, and it uses scrape jobs to collect those metrics.
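The informer-to-scrape-target flow can be pictured as an event-driven cache. The following is a minimal Python sketch, not the collector's implementation: the `Pod` and `TargetCache` names are hypothetical, the `on_add`/`on_update`/`on_delete` methods only mirror the kind of add, update, and delete handlers that Kubernetes informers invoke, and the `prometheus.io/scrape`, `prometheus.io/port`, and `prometheus.io/path` annotations are a widely used community convention assumed here for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    """Hypothetical stand-in for the pod metadata a Kubernetes informer delivers."""
    namespace: str
    name: str
    ip: str
    annotations: dict = field(default_factory=dict)


class TargetCache:
    """Keeps scrape targets in sync with informer-style add/update/delete events.

    The prometheus.io/* annotation names are an assumed community convention,
    not necessarily what the Chronosphere collector reads.
    """

    def __init__(self) -> None:
        # (namespace, name) -> scrape URL
        self._targets: dict[tuple[str, str], str] = {}

    @staticmethod
    def _key(pod: Pod) -> tuple[str, str]:
        return (pod.namespace, pod.name)

    def on_add(self, pod: Pod) -> None:
        self._sync(pod)

    def on_update(self, old: Pod, new: Pod) -> None:
        # Drop the old entry first in case the update turned scraping off.
        self._targets.pop(self._key(old), None)
        self._sync(new)

    def on_delete(self, pod: Pod) -> None:
        self._targets.pop(self._key(pod), None)

    def _sync(self, pod: Pod) -> None:
        ann = pod.annotations
        port = ann.get("prometheus.io/port")
        if ann.get("prometheus.io/scrape") == "true" and port:
            path = ann.get("prometheus.io/path", "/metrics")
            self._targets[self._key(pod)] = f"http://{pod.ip}:{port}{path}"
        else:
            # Pod does not opt in (or has no port): make sure it is not scraped.
            self._targets.pop(self._key(pod), None)

    def targets(self) -> list[str]:
        """Current scrape targets, sorted for stable output."""
        return sorted(self._targets.values())
```

Because the cache reacts to each event, a pod that is deleted or stops opting in to scraping drops out of the target list as soon as the corresponding event arrives.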

Scrape jobs use a pull-based model to scrape Prometheus metrics from the various applications. Prometheus metrics can also be scraped based on metadata defined in pod annotations. For StatsD or M3 metrics, the collector exposes User Datagram Protocol (UDP) endpoints, which use a push-based model: the respective applications send their metrics to the collector.
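To make the push side concrete, here is a minimal sketch of a UDP receiver that parses the StatsD text format (`<name>:<value>|<type>`, with an optional `|@<sample_rate>`). It is an illustration under stated assumptions, not the collector's code: the `StatsdMetric`, `parse_statsd_line`, and `receive_once` names are hypothetical, only numeric StatsD values are handled, and M3 metrics use a different encoding that this sketch does not cover.

```python
import socket
from dataclasses import dataclass


@dataclass
class StatsdMetric:
    name: str
    value: float
    kind: str  # "c" counter, "g" gauge, "ms" timer, "h" histogram, ...
    sample_rate: float = 1.0


def parse_statsd_line(line: str) -> StatsdMetric:
    """Parse one line of the form <name>:<value>|<type>[|@<sample_rate>].

    Only numeric values are handled; StatsD sets ("s") may carry strings.
    Extra fields that don't start with "@" (e.g. tag extensions) are ignored.
    """
    name, sep, rest = line.partition(":")
    parts = rest.split("|")
    if not sep or not name or len(parts) < 2:
        raise ValueError(f"malformed StatsD line: {line!r}")
    rate = 1.0
    for extra in parts[2:]:
        if extra.startswith("@"):
            rate = float(extra[1:])
    return StatsdMetric(name, float(parts[0]), parts[1], rate)


def receive_once(sock: socket.socket) -> list[StatsdMetric]:
    """Block for one datagram and parse each newline-separated metric in it."""
    data, _addr = sock.recvfrom(65535)
    return [parse_statsd_line(ln) for ln in data.decode().splitlines() if ln.strip()]


if __name__ == "__main__":
    # Loopback demo: an "application" pushes two metrics to our UDP endpoint.
    server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS choose a free port
    client = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    client.sendto(b"requests:1|c|@0.5\nqueue_depth:42|g", server.getsockname())
    for metric in receive_once(server):
        print(metric)
```

Note the inversion compared with scrape jobs: the receiver never decides whom to contact; it simply parses whatever applications push to its UDP port.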

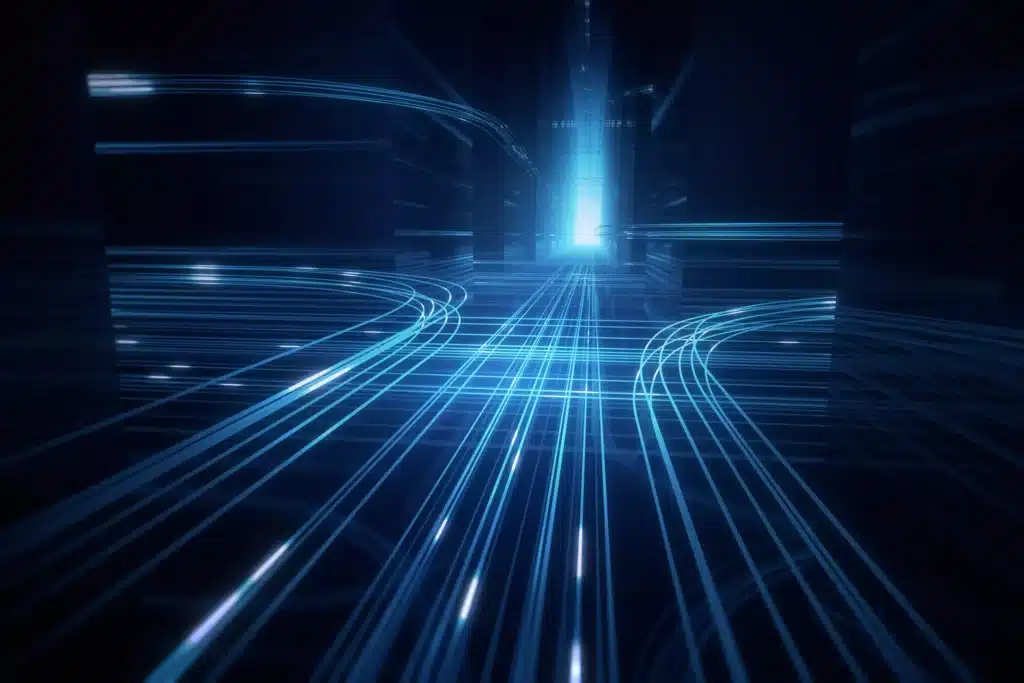

Once a scrape job or UDP endpoint has collected metrics, the collector will send them to the Chronosphere backend via the Chronosphere metrics ingester. From there, customers are able to query and alert against their metrics. See the below diagram for a high-level overview of the collector’s architecture and flow of metrics from a Kubernetes cluster to the Chronosphere backend:

Let’s now take a step back and look at the three ways a customer can deploy the collector in their environment(s).

Three ways to deploy the collector

- The first way to deploy the collector is as a DaemonSet. With this approach, one collector runs on each node of a cluster. This is the recommended deployment because if a node or its collector goes down, only the metrics from that node are lost. A DaemonSet also uses a single pod template to set the memory allocation for every collector, so resources are distributed evenly across the nodes in a cluster. This approach is best suited for standard or routine metrics that are regularly exposed across a handful of applications. In general, a DaemonSet is the easiest deployment type to manage.

- Another way to deploy the collector is as a sidecar. In this case, the collector runs as a companion container inside an application's pod, added by including the collector configuration in that application's manifest. This approach is typically used for pods whose applications generate large volumes of metrics, where a dedicated collector can help the pod operate more efficiently. It also makes it possible to collect metrics from a specific application or pod within a node. However, sidecar deployments can be more challenging to manage than DaemonSets, because the collector configuration has to be added to, and maintained in, the manifest of every application that uses one. Also note that while a sidecar lets you control resource consumption by targeting only certain applications or pods, a problem with the collector, such as an out-of-memory (OOM) error, can disrupt all the containers in that pod.

- Finally, you can deploy the collector as a Kubernetes Deployment. This approach runs a fixed number of collectors, each of which can collect metrics from pods across multiple nodes. Because you pre-determine the number of collectors and their allocated resources, this approach gives you greater control over resources than sidecar and DaemonSet deployments. However, we don't recommend this deployment type, because it requires additional overhead to manage and control how the metrics load is split across the collectors in the Deployment.
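One way to see the difference between the three deployment types is in how each collector decides which targets are its own. The sketch below is hypothetical (the `Target` type and the three helper functions are illustrative names, not the collector's API). It assumes each DaemonSet collector knows its node name, which in Kubernetes is commonly injected through the Downward API (`spec.nodeName`), and it uses simple hash-modulo sharding as one possible way to split load across a Deployment's collectors.

```python
import zlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    pod: str   # "namespace/name"
    node: str  # node the pod is scheduled on
    url: str   # scrape endpoint


def daemonset_targets(targets: list[Target], my_node: str) -> list[Target]:
    """DaemonSet: each collector scrapes only pods on its own node, so losing
    one node (or its collector) only loses that node's metrics."""
    return [t for t in targets if t.node == my_node]


def sidecar_targets(targets: list[Target], my_pod: str) -> list[Target]:
    """Sidecar: the collector scrapes only the pod it runs alongside."""
    return [t for t in targets if t.pod == my_pod]


def deployment_targets(targets: list[Target], replica: int, replicas: int) -> list[Target]:
    """Deployment: split targets across a fixed number of collectors by hashing.

    zlib.crc32 is stable across processes, unlike Python's built-in hash() for
    strings, so every collector computes the same assignment.
    """
    return [t for t in targets if zlib.crc32(t.url.encode()) % replicas == replica]
```

The first two functions need no coordination, because node and pod boundaries already partition the work. The Deployment case needs every collector to agree on the replica count, and changing that count reassigns most targets, which is one concrete form of the management overhead described above.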

While these are all different ways of deploying the collector, they are not mutually exclusive: you can combine deployment types within the same node or cluster. For example, you may want a DaemonSet deployment to scrape standard metrics from all nodes in a cluster, plus a sidecar deployment for a pod or application within a node that produces business-critical metrics at large scale.

But how do you prevent double scraping when multiple collectors are deployed on the same node? The main safeguard is to keep track of how you've mixed and matched your deployments, and to explicitly configure each collector to target only what you want it to. Using annotations on each pod or node to control which collector scrapes it can help, but monitoring the metrics volumes from your various collectors is the most effective way to tell whether your metrics are being double scraped.
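As a complement to watching metrics volumes, a simple overlap check can flag targets that more than one collector claims. This sketch assumes you can export each collector's target list into a mapping from collector name to target URLs; that input shape and the `find_double_scrapes` name are hypothetical, not a Chronosphere feature.

```python
from collections import defaultdict


def find_double_scrapes(assignments: dict[str, set[str]]) -> dict[str, list[str]]:
    """Return {target: sorted collector names} for every target claimed by 2+ collectors.

    `assignments` maps a collector name to the set of target URLs it scrapes.
    """
    owners: dict[str, set[str]] = defaultdict(set)
    for collector, targets in assignments.items():
        for target in targets:
            owners[target].add(collector)
    return {t: sorted(c) for t, c in owners.items() if len(c) > 1}
```

For example, a DaemonSet collector and a sidecar that both scrape the same pod's endpoint would show up as one entry listing both collectors, which matches the mixed DaemonSet-plus-sidecar setup described above.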

What’s next for the collector?

As with any product, we are always looking for ways to improve the collector, and one of our primary sources of feedback is our own internal usage and testing of it. We continuously monitor the status and health of our customers' collectors, and we test any new deployments of the collector via scenario tests from Temporal. Our customers deploy the collector in many different ways, and these scenario tests let us re-create and test their various approaches to ensure everything in production is operating as intended.

In terms of the roadmap for the collector, we are continuing to add more tests and to improve the UX for our customers. This includes additional functionality for troubleshooting and debugging when managing multiple collector deployments, as well as reducing the resources needed to run the collector. If you're interested in learning more about Chronosphere and the collector, please contact us by email or request a demo.